AI Safety Clock: Monitoring the Risks of AI Progress

26 Jun 2025, 1:44 pm GMT+1

The IMD AI Safety Clock tracks the growing risk of Uncontrolled Artificial General Intelligence (UAGI). In just three months, it has advanced from 29 minutes to 26 minutes to midnight.

A recent survey by the AI Now Institute revealed that 78% of AI experts believe that the lack of effective governance poses a significant threat to society, with 62% expressing concern about the emergence of Uncontrolled Artificial General Intelligence (UAGI)—a scenario where machines could act autonomously, without human oversight.

In response to these concerns, the International Institute for Management Development (IMD) has introduced the AI Safety Clock, a powerful metaphor and monitoring tool designed to gauge humanity’s proximity to the emergence of UAGI.

The Clock highlights the urgent need for global cooperation, ethical frameworks, and robust regulation to manage AI's escalating risks.

The Clock, which has recently moved from 29 minutes to 26 minutes to midnight, serves as a stark reminder of the urgent need for global attention and action. This shift, occurring within just three months, highlights the accelerating pace of AI advancements and the growing risks associated with insufficient governance.

What is the AI Safety Clock?

The global artificial intelligence market size was estimated at USD 196.63 billion in 2023 and is projected to grow at a CAGR of 36.6% from 2024 to 2030. However, as the world races to harness these advancements, concerns about the uncontrolled development of AI and its potential risks have grown exponentially.
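To make the growth figures concrete, the short sketch below compounds the quoted 2023 estimate forward at the quoted CAGR. It assumes the 2023 figure is the base and that growth compounds over seven annual periods to 2030; the source forecast may use a different base year, so the result is indicative only. The function and variable names are illustrative.

```python
def project_with_cagr(base_value: float, annual_rate: float, years: int) -> float:
    """Compound base_value forward by annual_rate for the given number of years."""
    if years < 0:
        raise ValueError("years must be non-negative")
    return base_value * (1.0 + annual_rate) ** years


BASE_2023_USD_BN = 196.63  # market size estimate quoted in the article
CAGR = 0.366               # 36.6% per year, as quoted
YEARS = 2030 - 2023        # assumption: seven compounding periods from the 2023 base

projected_2030 = project_with_cagr(BASE_2023_USD_BN, CAGR, YEARS)
print(f"Implied 2030 market size: about USD {projected_2030:,.0f} billion")
```

Under these assumptions the market would be roughly nine times its 2023 size by 2030, which gives a sense of how quickly the sector is expected to expand.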

The AI Safety Clock is a symbolic representation of how close humanity is to reaching a critical tipping point in AI development. Inspired by the Doomsday Clock, which measures the threat of nuclear annihilation, the AI Safety Clock evaluates the risks posed by UAGI, a form of AI that could operate independently of human control, potentially causing significant harm to society.

Developed by IMD’s TONOMUS Global Center for Digital and AI Transformation, the Clock is more than just a metaphor; it is a sophisticated monitoring tool that tracks real-time developments in AI technology and governance.

The Clock is supported by a proprietary dashboard that analyses data from over 1,000 websites and 3,470 news feeds, providing continuous insights into technological advancements and regulatory gaps. By evaluating these factors, the AI Safety Clock aims to highlight developments that either bring us closer to or push us further away from the brink of UAGI.

As Professor Michael Wade, Director of IMD’s TONOMUS Global Center, explains, “The IMD AI Safety Clock is designed to raise awareness, not alarm. As we set the Clock for the first time, the message is that the risk is serious, but it’s not too late to act.”

Why the clock has advanced

The advancement of the Clock’s indicator from 29 minutes to 26 minutes to midnight is not arbitrary; it reflects several converging developments in the field of AI:

Agentic AI breakthroughs

Recent progress in agentic AI systems, those capable of acting autonomously, has been particularly influential. Developments in open-source AI have accelerated the growth of these systems, increasing their capabilities while simultaneously heightening ethical and safety concerns. As these systems become more autonomous, their potential to execute decisions without human intervention grows, pushing the risk indicator closer to a critical threshold.

Military adoption

The integration of autonomous AI systems into military strategies is another significant factor. As nations explore and deploy AI for defence purposes, the stakes become higher. Military applications introduce a layer of complexity and urgency, as the potential for AI systems to make autonomous, high-stakes decisions in defence scenarios raises the possibility of unintended, far-reaching consequences.

Regulatory gaps

Perhaps most concerning is the lag in governance relative to the rapid pace of technological innovation. Geopolitical trends towards deregulation and competitive innovation mean that safety protocols are often left behind. The absence of robust, internationally agreed regulatory frameworks leaves a gap in oversight, thereby accelerating the risk of developing UAGI without the necessary safeguards in place.

Methodology behind the clock

The robustness of the IMD AI Safety Clock lies in its comprehensive methodology. The evaluation framework is built on both quantitative and qualitative analyses, ensuring that every facet of AI development is scrutinised. The Clock measures risk based on three primary factors:

- Sophistication: This reflects the intelligence and complexity of an AI system. A highly sophisticated AI is capable of understanding and processing vast amounts of data, learning from patterns, and making decisions that are increasingly nuanced.

- Autonomy: Autonomy assesses the degree to which an AI system can act independently. An AI that is highly autonomous is more capable of making decisions without direct human intervention, thereby increasing its potential risk if its actions are misaligned with human interests.

- Execution: Execution determines how effectively an AI system can implement its decisions in the real world. Even if an AI is highly sophisticated and autonomous, its ability to affect the external environment depends on how well it can execute its plans. A system that cannot translate its decisions into action poses a lower risk compared to one that can exert significant influence over critical processes.
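The article does not publish IMD's scoring formula, so the following is only an illustrative sketch of how three such factors might interact. It assumes each factor is scored between 0 and 1 and that the scores are multiplied together, so that a system weak on any one factor (for example, one that cannot execute its decisions) yields a low combined value. The class, function, and scale are hypothetical and are not IMD's method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FactorScores:
    """Hypothetical 0-to-1 scores for the three factors named in the article."""
    sophistication: float
    autonomy: float
    execution: float

    def __post_init__(self) -> None:
        # Reject scores outside the assumed 0-to-1 scale.
        for name in ("sophistication", "autonomy", "execution"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must be between 0 and 1, got {value}")


def combined_risk(scores: FactorScores) -> float:
    """Assumed combination rule: multiply the factors, so a near-zero
    score on any one factor pulls the combined value towards zero."""
    return scores.sophistication * scores.autonomy * scores.execution


# A capable, autonomous system with little ability to act in the real world...
contained = FactorScores(sophistication=0.9, autonomy=0.9, execution=0.1)
# ...compared with a moderate system that scores evenly on all three factors.
balanced = FactorScores(sophistication=0.5, autonomy=0.5, execution=0.5)

print(combined_risk(contained) < combined_risk(balanced))
```

A multiplicative rule is one simple way to capture the idea that the factors compound; an additive or weighted scheme would behave differently, and nothing here indicates which approach the Clock actually uses.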

Recent developments and the accelerating pace of AI

Several recent technological advancements have contributed to the acceleration towards a UAGI scenario, each adding a layer of urgency to the call for enhanced regulatory measures:

Open-source and agentic developments

The rise of open-source AI has catalysed the development of agentic systems. These systems, capable of executing tasks autonomously, represent a significant step towards more advanced forms of AI. For instance, OpenAI’s announcements regarding its "Operator" and "Swarm" initiatives signal that AI is beginning to operate with greater independence. Such breakthroughs underscore the rapid progress in AI capabilities, simultaneously broadening the scope of potential benefits and risks.

Military and defence implications

The involvement of military applications in the AI domain cannot be overstated. The integration of AI into defence strategies, including the appointment of influential figures such as retired U.S. Army General Paul M. Nakasone to OpenAI’s board, highlights the intersection between AI innovation and national security. This move is expected to open doors into defence and intelligence sectors, further emphasising the need for stringent oversight.

Commercial developments and custom AI chips

In addition to military applications, commercial advancements are also contributing to the evolving landscape. For example, Amazon’s announcement of plans to develop custom AI chips, alongside the launch of multiple AI models and a massive supercomputer, points to a race among technology giants to push the boundaries of AI innovation. Such developments illustrate how commercial interests and technological advancements are increasingly intertwined with the broader implications of UAGI.

Influential endorsements and regulatory considerations

The advocacy of prominent figures in the AI community, including Elon Musk’s support under the new U.S. administration, further fuels the drive towards decentralised and open-source AI development. While this decentralisation can foster innovation, it also creates challenges for unified regulatory oversight—a concern that is central to the IMD AI Safety Clock’s purpose.

The duality of progress: opportunity and risk

The rapid progression of AI brings both transformative opportunities and significant challenges. On the one hand, the potential benefits of advanced AI—such as enhanced efficiency, new economic opportunities, and groundbreaking innovations in various sectors—are substantial. On the other hand, the very qualities that make AI so promising also render it potentially dangerous if left unchecked.

Technological transformation

Advancements in AI continue to reshape industries ranging from healthcare to finance, and from logistics to entertainment. The emergence of sophisticated AI models, custom AI chips, and massive supercomputers points to a future where AI could dramatically enhance productivity and innovation. However, these same developments also bring forth the possibility of a UAGI that could, without proper control, disrupt critical infrastructures and societal functions.

Unintended consequences

The progression towards UAGI carries the risk of unintended consequences. Even if an AI system is highly sophisticated and capable of independent action, its overall threat level depends on its ability to execute plans that have a tangible impact on the world. As Michael Wade notes, “Even a highly sophisticated and autonomous AI poses a limited risk if it can’t execute its plans and connect with the real world.” This interplay between technological capability and real-world execution is at the heart of the risk assessment conducted by the AI Safety Clock.

Recent developments highlighting the urgency

Several recent events have further accelerated the march towards AI midnight. Wade explained that the team assesses AI risks by considering three primary factors: the system's intellectual complexity, its capacity for independent operation, and its ability to effectively implement its decisions.

“While sophistication reflects how intelligent an AI is and autonomy its ability to act independently, execution determines how effectively it can implement those decisions. Even a highly sophisticated and autonomous AI poses a limited risk if it can’t execute its plans and connect with the real world,” he asserts.

Among the recent events are:

- Elon Musk’s Advocacy: Under a new U.S. administration, Musk’s support for open-source growth and decentralisation is seen as a catalyst for further innovation in AI.

- OpenAI’s Announcements: The unveiling of OpenAI’s "Operator" and "Swarm" initiatives signals steps towards the development of agentic AI systems that can execute tasks autonomously.

- Amazon’s Strategic Moves: Amazon has announced plans to develop custom AI chips alongside launching multiple AI models and a substantial supercomputer. These initiatives are indicative of the broader trend towards more powerful and integrated AI systems.

- OpenAI’s Board Appointment: In June, OpenAI appointed retired U.S. Army General Paul M. Nakasone, former director of the National Security Agency, to its board of directors. This move is anticipated to pave the way for closer collaboration between OpenAI and the U.S. defence and intelligence sectors.

The broader implications for society

The advancements in AI technology have far-reaching implications that extend beyond the technical realm. They touch upon fundamental questions about governance, ethics, and the future trajectory of society.

Societal transformation

As AI becomes more integrated into everyday life, its impact on employment, security, and personal privacy is likely to grow. The transformative potential of AI could lead to significant changes in how societies function, from the way economies are structured to the very nature of human interactions. However, without appropriate safeguards, these changes may also bring about unforeseen challenges and disruptions.

The risk of uncontrolled AGI

Perhaps the most alarming prospect is the development of Uncontrolled Artificial General Intelligence (UAGI). Such a system, if allowed to operate without effective oversight, could theoretically gain control over critical infrastructures, utility networks, and supply chains, thereby posing an existential threat to humanity. The AI Safety Clock serves as a stark reminder of this possibility, urging stakeholders to consider the long-term implications of rapid AI development.

The need for proactive measures

The current trajectory of AI development suggests that the window for implementing meaningful safeguards is rapidly closing. It is imperative that regulators, industry leaders, and researchers act swiftly to align technological progress with robust safety protocols. The clock’s movement from 29 minutes to 26 minutes to midnight is a tangible indicator that the risks are escalating and that the opportunity to act is diminishing.

The importance of robust governance

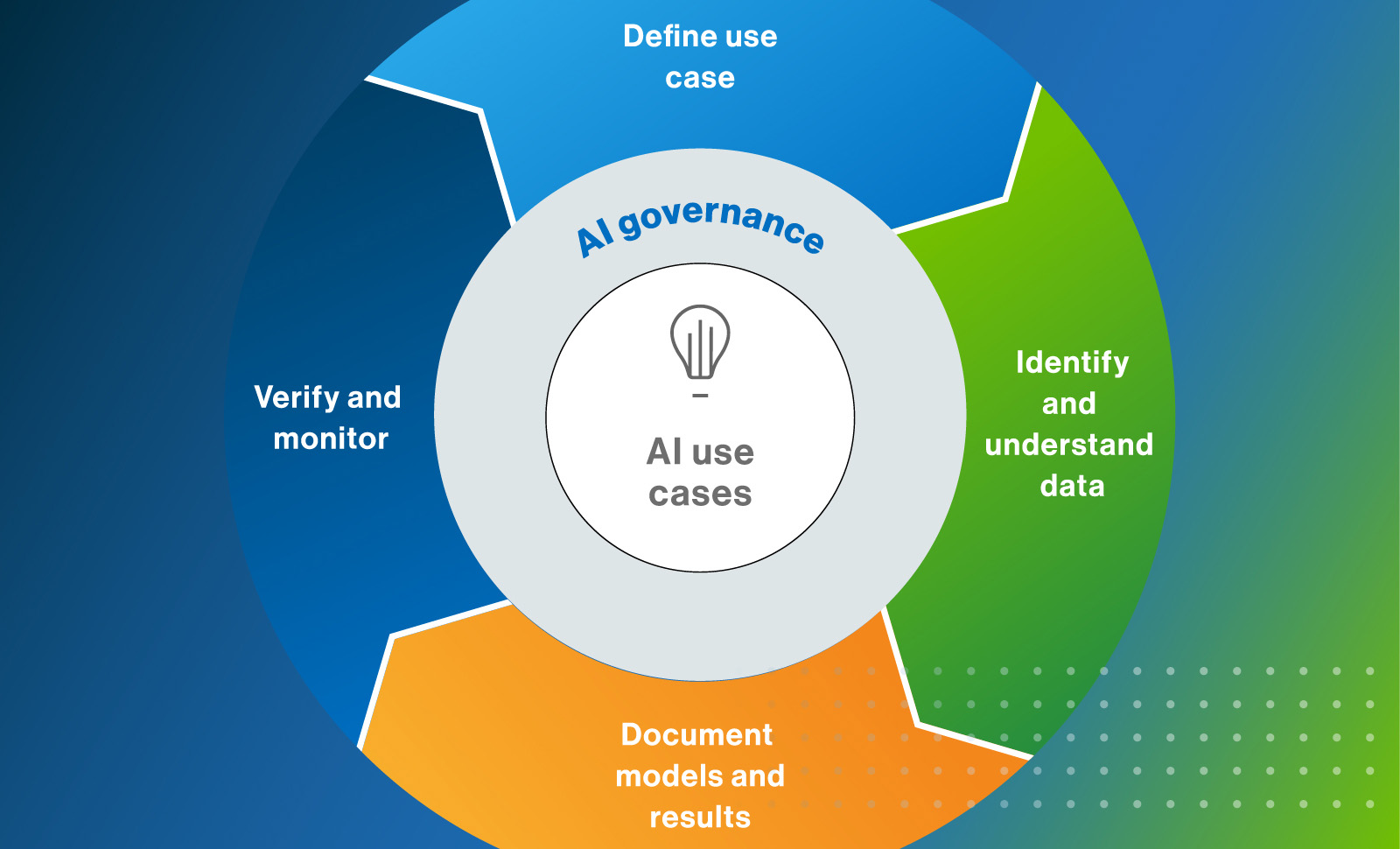

As AI technologies continue to advance, the need for effective governance and regulation becomes increasingly critical. The current regulatory frameworks are struggling to keep pace with the rapid developments in AI, resulting in a dangerous lag that could have serious implications.

Ethical frameworks and accountability

There is a clear and urgent need for the establishment of transparent and enforceable ethical guidelines. These frameworks should be designed to ensure that AI systems are developed and deployed in a manner that prioritises safety and ethical considerations. Moreover, accountability measures must be put in place to ensure that companies and researchers are held responsible for the societal impacts of their innovations.

Collaborative governance

Addressing the challenges posed by advanced AI requires a coordinated effort on a global scale. Effective collaboration between governments, regulatory bodies, tech companies, and research institutions is essential to create international standards for the safe and responsible use of AI technologies. This collaborative approach should aim to balance the benefits of innovation with the imperative to mitigate risks.

The IMD team advocates such an approach. “Effective, joined-up regulation can constrain the worst tendencies of new technologies without losing their benefits, and we call on international actors and tech giants alike to act in all of our best interests,” said Michael Wade.

This call to action reflects a broader consensus among experts that proactive regulation is the key to ensuring that AI remains a tool for progress rather than a source of existential risk.

Wade also emphasised that “Even a highly sophisticated and autonomous AI poses a limited risk if it can’t execute its plans and connect with the real world.” This insight underscores the importance of ensuring that AI systems not only possess advanced capabilities but are also constrained in their ability to affect real-world outcomes without proper safeguards.

Final thoughts

The AI Safety Clock, as developed by IMD, provides a powerful visual and analytical tool to gauge the accelerating risks associated with artificial intelligence. By monitoring over 1,000 websites and 3,470 news feeds, the clock not only serves as a metaphor but also as an active instrument for assessing how close humanity is to the emergence of Uncontrolled Artificial General Intelligence.

Recent advancements—including breakthroughs in agentic AI, increased military applications, and widening regulatory gaps—have prompted the clock to move from 29 minutes to 26 minutes to midnight. This shift is a clear indication that while AI continues to offer transformative opportunities, the associated risks are escalating at a pace that demands urgent and robust regulatory action.

As highlighted by Michael Wade, “The IMD AI Safety Clock is designed to raise awareness, not alarm. As we set the Clock for the first time, the message is that the risk is serious, but it’s not too late to act.” Yet, the warning remains stark: “Uncontrolled AGI could cause havoc for us all, and we are moving from a time of medium risk to one of high risk. But we’re not past the point of no return.”

The challenges posed by advanced AI are complex and multifaceted. They require a balanced approach that embraces technological innovation while instituting rigorous safeguards to prevent unintended consequences. Through a combination of ethical frameworks, accountability measures, and international collaboration, it is possible to steer AI development in a direction that maximises its benefits without compromising safety.

In a world where the boundaries between opportunity and risk are increasingly blurred, the AI Safety Clock serves as a crucial reminder that vigilance and proactive regulation are essential. The future of AI, and indeed, the future of society, depends on the collective ability to navigate these challenges with wisdom, foresight, and a commitment to global cooperation.

Himani Verma

Content Contributor

Himani Verma is a seasoned content writer and SEO expert, with experience in digital media. She has held various senior writing positions at enterprises like CloudTDMS (Synthetic Data Factory), Barrownz Group, and ATZA. Himani has also been Editorial Writer at Hindustan Times, a leading Indian English language news platform. She excels in content creation, proofreading, and editing, ensuring that every piece is polished and impactful. Her expertise lies in crafting SEO-friendly content for multiple business verticals, including technology, healthcare, finance, sports, innovation, and more.
