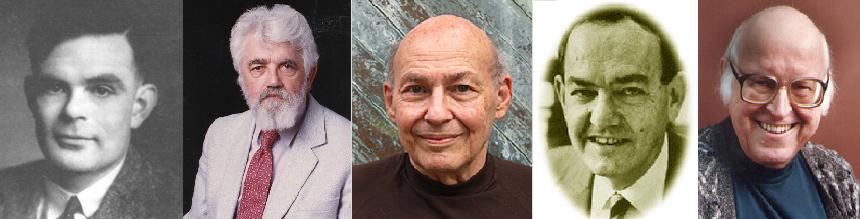

The Fathers Behind The AI Evolution

28 Sept 2023, 9:11 am GMT+1

AI couldn’t exist today without the pioneering work of visionaries like Alan Turing, John McCarthy, Marvin Minsky, Allen Newell, and Herbert A. Simon, as well as the contemporary trailblazers Geoffrey Hinton, Yoshua Bengio, and Yann LeCun.

The AI landscape as we perceive it today represents a culmination of decades of scientific exploration, innovation, and relentless pursuit of intelligent machines. What once began as theoretical concepts and rudimentary algorithms has evolved into a multifaceted field that impacts nearly every aspect of our daily lives.

That is because AI-based algorithms are now embedded in a vast range of computer-based processes and in many aspects of our society. Chatbots streamline customer service interactions, recommendation systems tailor our online experiences and tell us what to watch or listen to next, and navigation apps suggest the best route to work. And that is only the beginning of the generative AI (GenAI) era.

In fact, AI has become an indispensable tool for enhancing efficiency, personalization, and problem-solving. But like every cutting-edge, disruptive technology, AI has evolved thanks to the contributions of brilliant pioneering minds.

The early years of Artificial Intelligence: From Alan Turing to Marvin Minsky

The roots of AI can be traced back to Alan Turing, a British mathematician, logician, and computer scientist. In 1950, Turing published the seminal paper "Computing Machinery and Intelligence." In this landmark work, he proposed a test, which he called the "imitation game," to determine whether a machine could exhibit intelligent behavior indistinguishable from that of a human. Known today as the Turing Test, this idea set the stage for the exploration of machine intelligence.

The test is conducted as follows: a human judge engages in a text-based conversation with both a human respondent and a machine candidate, without knowing which is which. If the judge is unable to reliably distinguish between the human and the machine based on the conversation alone, then the machine is said to have passed the Turing Test and demonstrated a level of artificial intelligence that approximates human intelligence in conversation.
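To make the procedure concrete, here is a minimal Python sketch of the protocol under simplifying assumptions: each respondent is just a function from a question to an answer, the judge is a function that reads two anonymised transcripts and names the one it believes is the machine, and the "pass" rule (judge accuracy no better than chance plus a 10% margin) is a hypothetical threshold chosen for illustration, not Turing's own criterion. All function names here are invented for the example.

```python
import random
from typing import Callable

# A transcript is a list of (question, answer) pairs seen through a text-only channel.
Transcript = list[tuple[str, str]]
Respondent = Callable[[str], str]               # question -> answer
Judge = Callable[[Transcript, Transcript], str]  # returns "A" or "B": the suspected machine


def run_imitation_game(judge: Judge, human: Respondent, machine: Respondent,
                       questions: list[str], trials: int, seed: int = 0) -> float:
    """Return the fraction of trials in which the judge correctly identified the machine."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(trials):
        # Hide identities: randomly assign the machine to label "A" or "B".
        machine_label = rng.choice(["A", "B"])
        human_label = "B" if machine_label == "A" else "A"
        players = {machine_label: machine, human_label: human}
        # The judge only ever sees the text of each conversation.
        transcripts = {label: [(q, players[label](q)) for q in questions] for label in ("A", "B")}
        if judge(transcripts["A"], transcripts["B"]) == machine_label:
            correct += 1
    return correct / trials


def machine_passes(accuracy: float, margin: float = 0.1) -> bool:
    # Hypothetical criterion: the judge does no better than chance (0.5) plus a margin.
    return accuracy <= 0.5 + margin


# Usage with toy respondents and a naive judge.
questions = ["Do you enjoy poetry?", "What is 2 + 2?"]


def human(q: str) -> str:
    return "Let me think about that."


def clumsy_machine(q: str) -> str:
    return "ERROR: INPUT NOT UNDERSTOOD"


def naive_judge(a: Transcript, b: Transcript) -> str:
    # Flag transcript A if it contains an obvious error message, otherwise suspect B.
    return "A" if any("ERROR" in answer for _, answer in a) else "B"


accuracy = run_imitation_game(naive_judge, human, clumsy_machine, questions, trials=200)
print(accuracy, machine_passes(accuracy))  # prints: 1.0 False
```

A machine whose answers are indistinguishable from the human's would leave this judge guessing, so its accuracy would hover around 0.5 and the machine would pass under the illustrative rule above.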

The Turing Test is not a definitive measure of machine intelligence but rather a benchmark for evaluating natural language understanding and generation. It focuses on the machine's ability to engage in open-ended conversation, demonstrate understanding of context, and provide responses that are indistinguishable from those of a human. While it has limitations and critics, the Turing Test has played a significant role in shaping discussions about AI capabilities and continues to be a point of reference in AI research and philosophy.

Alan Turing pioneered this concept and also foresaw a darker future: “It seems probable that once the machine thinking method had started, it would not take long to outstrip our feeble powers… They would be able to converse with each other to sharpen their wits. At some stage therefore, we should have to expect the machines to take control.”

In 1955, John McCarthy coined the term "artificial intelligence" and went on to play a central role in shaping the field. He co-organized the Dartmouth Workshop in 1956, a historic event that marked the formal birth of AI as a distinct discipline, where McCarthy and his colleagues articulated the goals and possibilities of AI. This laid the groundwork for the research and development of the decades that followed.

McCarthy also created the programming language Lisp. Developed in the late 1950s, Lisp became a foundational tool for AI research and innovation. Its flexibility and its ability to handle symbolic reasoning made it particularly well suited to AI applications. Many groundbreaking AI projects and systems, including early natural language processing programs and expert systems, were implemented in Lisp.

In the 1950s and 1960s, Allen Newell and Herbert A. Simon contributed to our understanding of human intelligence and paved the way for further advancements in both AI and cognitive psychology.

Together with Cliff Shaw, they created the Logic Theorist, a pioneering computer program that marked the dawn of AI research. The program proved theorems from Whitehead and Russell's Principia Mathematica, demonstrating that machines could engage in complex symbolic reasoning. Its success was a significant achievement: it showed that a computer could carry out problem-solving tasks that were previously reserved for human mathematicians.

Newell and Simon's work extended beyond AI into cognitive psychology, where they introduced the concept of "thinking as information processing." Their research proposed that human cognition can be understood as the manipulation of symbols and information, which laid the groundwork for the field of cognitive science. This insight revolutionized the study of the human mind, spawning research into cognitive processes like problem-solving, memory, and decision-making.

Marvin Minsky, a pioneer in cognitive science and AI, co-founded the Massachusetts Institute of Technology's (MIT) Artificial Intelligence Laboratory. His work in the early days of AI research focused on the development of neural networks and computational models of human cognition. Minsky's ambitious vision included the creation of machines that could simulate human thought processes, which inspired generations of AI researchers.

One of Minsky's most influential concepts was the idea of "frames," set out in his 1974 paper "A Framework for Representing Knowledge." This notion helped lay the foundation for knowledge representation in AI systems. Frames provided a structured way to organize and represent knowledge, enabling AI systems to reason about and make sense of complex information. His work on frames became important in various AI applications, including expert systems and natural language understanding.
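As a rough illustration of the idea, the Python sketch below models a frame as a set of named slots plus an optional parent frame whose values act as inherited defaults. It is a simplified, hypothetical rendering: the `Frame` class and the room/kitchen example are invented here, and real frame systems add more machinery, such as constraints on slot values and procedures triggered when a slot is filled, which is omitted.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class Frame:
    """A simplified frame: named slots plus an optional parent ("is-a") frame."""
    name: str
    parent: Optional[Frame] = None
    slots: dict[str, Any] = field(default_factory=dict)

    def get(self, slot: str) -> Any:
        # Look in this frame first, then fall back to defaults inherited from ancestors.
        frame: Optional[Frame] = self
        while frame is not None:
            if slot in frame.slots:
                return frame.slots[slot]
            frame = frame.parent
        raise KeyError(f"{self.name} has no value for slot {slot!r}")


# A generic "room" frame supplies defaults that more specific frames can override.
room = Frame("room", slots={"walls": 4, "has_ceiling": True, "has_door": True})
kitchen = Frame("kitchen", parent=room, slots={"contains": ["stove", "sink"]})
my_kitchen = Frame("my kitchen", parent=kitchen, slots={"walls": 5})

print(my_kitchen.get("walls"))     # 5 (overridden locally)
print(my_kitchen.get("has_door"))  # True (inherited default from "room")
```

The appeal of this structure is that a system only needs to record what is unusual about a specific situation; everything else is filled in from the more general frame.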

The era of neural networks and deep learning in AI: The Godfathers of AI

The era of neural networks and deep learning in AI marks a transformative period shaped above all by three pioneering figures: Geoffrey Hinton, Yoshua Bengio, and Yann LeCun, often referred to as the “Godfathers of AI”.

Geoffrey Hinton, renowned as the "Godfather of Deep Learning," made pivotal contributions to artificial neural networks. His 1986 paper with David Rumelhart and Ronald Williams popularized the backpropagation algorithm, a breakthrough that made it practical to train neural networks with multiple layers. He once said:

“I've always believed that if we can get networks with many layers, they could do deep learning.”

Hinton kept researching the potential of neural networks, even during periods of skepticism. His work on restricted Boltzmann machines and deep belief networks rekindled interest in deep learning, paving the way for powerful models used in diverse applications.
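For readers curious about what backpropagation actually computes, here is a minimal pure-Python sketch, assuming a tiny network with one sigmoid hidden layer, a single sigmoid output and a squared-error loss. The backward pass applies the chain rule from the output layer back to the input weights, and plain stochastic gradient descent then nudges each weight against its gradient. The function names and hyperparameters (three hidden units, learning rate 0.5, 5,000 epochs) are illustrative choices, not taken from any particular paper.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_in: int, n_hidden: int, seed: int = 0) -> dict:
    """Small random weights for a network with one hidden layer and one output."""
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "W2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def forward(p: dict, x: list[float]) -> tuple[list[float], float]:
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(p["W1"], p["b1"])]
    y = sigmoid(sum(w * hj for w, hj in zip(p["W2"], h)) + p["b2"])
    return h, y


def loss(p: dict, x: list[float], t: float) -> float:
    _, y = forward(p, x)
    return 0.5 * (y - t) ** 2


def backward(p: dict, x: list[float], t: float) -> dict:
    """Gradients of the squared error via the chain rule, output layer first."""
    h, y = forward(p, x)
    delta_out = (y - t) * y * (1 - y)  # dLoss/d(output pre-activation)
    # Send the error back through W2 and the derivative of each hidden sigmoid.
    delta_hidden = [delta_out * w * hj * (1 - hj) for w, hj in zip(p["W2"], h)]
    return {
        "W1": [[d * xi for xi in x] for d in delta_hidden],
        "b1": delta_hidden,
        "W2": [delta_out * hj for hj in h],
        "b2": delta_out,
    }


def sgd_step(p: dict, g: dict, lr: float) -> None:
    """Move every parameter a small step against its gradient."""
    p["W1"] = [[w - lr * gw for w, gw in zip(row, grow)] for row, grow in zip(p["W1"], g["W1"])]
    p["b1"] = [b - lr * gb for b, gb in zip(p["b1"], g["b1"])]
    p["W2"] = [w - lr * gw for w, gw in zip(p["W2"], g["W2"])]
    p["b2"] -= lr * g["b2"]


# Usage: learn XOR, a task a single-layer perceptron cannot represent.
XOR = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
params = init_params(n_in=2, n_hidden=3)
loss_before = sum(loss(params, x, t) for x, t in XOR)
for _ in range(5000):
    for x, t in XOR:
        sgd_step(params, backward(params, x, t), lr=0.5)
loss_after = sum(loss(params, x, t) for x, t in XOR)
```

A quick way to sanity-check a hand-written backward pass like this is to compare one analytic gradient with a finite-difference estimate of the loss; the two should agree to many decimal places.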

Yoshua Bengio’s research was instrumental in advancing neural networks and deep learning through his focus on probabilistic models and deep belief networks.

“The beauty of deep learning is that it allows us to learn from data instead of having to handcraft knowledge systems”, he once said.

To realise this, he made significant contributions to the training of deep neural networks. His work on unsupervised learning and representation learning has significantly shaped the success of deep learning applications, complementing the efforts of his peers.

Yann LeCun's pioneering work on convolutional neural networks (CNNs) had a major impact on computer vision and image recognition, areas at the heart of the AI resurgence.

“AI is not magic; it's just very clever software”, said LeCun. His CNN architecture has enabled modern AI to recognise objects and patterns more precisely than ever. LeCun has also championed the broad adoption of deep learning techniques, taking AI research in new directions.
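The core operation inside a CNN layer can be sketched in a few lines of Python. The example below is a simplified illustration, not LeCun's architecture: it slides a small 3x3 kernel across a grayscale image patch and keeps the positive responses with a ReLU. In a real CNN the kernel values are learned from data and many kernels are stacked with pooling layers; here a single hand-set vertical-edge kernel stands in for a learned one. Like most deep learning libraries, it computes cross-correlation, which the CNN literature conventionally calls convolution. The function names are hypothetical.

```python
def conv2d(image: list[list[float]], kernel: list[list[float]]) -> list[list[float]]:
    """'Valid' 2-D cross-correlation: apply the kernel at every position where it fits."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map: list[list[float]]) -> list[list[float]]:
    """Keep positive responses and zero out the rest."""
    return [[max(0, v) for v in row] for row in feature_map]


# A 4x6 grayscale patch: dark on the left, bright on the right.
image = [[0, 0, 0, 9, 9, 9] for _ in range(4)]
# Hand-set vertical-edge detector; a trained CNN would learn kernels like this from data.
vertical_edge = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

feature_map = relu(conv2d(image, vertical_edge))
print(feature_map)  # [[0, 27, 27, 0], [0, 27, 27, 0]]
```

Because the same kernel is reused at every position, the layer responds to the edge wherever it appears in the image; this weight sharing is a large part of why CNNs suit image recognition.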

“The future of AI is much more about developing the ability to do unsupervised learning”, Geoffrey Hinton once predicted. It is no wonder that the contributions of these “Godfathers of AI” have reshaped the modern AI landscape and opened up unprecedented possibilities.

AI as we see it today

At its core, contemporary AI is characterized by machine learning: the capacity to learn from data, adapt to new information, and make informed decisions. This paradigm shift has fueled the development of AI systems that excel in tasks ranging from natural language processing and computer vision to autonomous driving and healthcare diagnostics.

In the present AI landscape, we are witnessing the widespread integration of AI-driven solutions across industries and sectors. For instance, AI logo generators help businesses create professional branding with little effort.

Furthermore, AI's ability to sift through vast datasets, detect patterns, and predict outcomes has revolutionized scientific research, empowering discoveries in fields like genomics, materials science, and climate modeling. As AI continues to evolve, ethical considerations, transparency, and responsible AI practices have taken center stage, reflecting our commitment to harnessing this transformative technology for the betterment of society while mitigating potential risks and biases. In essence, AI as we know it today embodies both the tremendous potential and the ethical responsibilities that come with shaping a future where intelligent machines work alongside humans to advance our collective well-being.


Hernaldo Turrillo

Contributor

Hernaldo Turrillo is a writer and author specialised in innovation, AI, DLT, SMEs, trading, investing and new trends in technology and business. He has been working for ztudium group since 2017. He is the editor of openbusinesscouncil.org, tradersdna.com and hedgethink.com, and writes regularly for intelligenthq.com and socialmediacouncil.eu. Hernaldo was born in Spain and, after a few years of personal growth, settled in London, United Kingdom. He earned his bachelor's degree in Journalism at the University of Seville, Spain, and began working as a reporter for the newspaper Europa Sur, writing about politics and society. He also worked as a community manager and marketing advisor in Los Barrios, Spain. Innovation, technology, politics and the economy are his main interests, with a special focus on new trends and ethical projects. He enjoys getting lost in words, explaining what he understands of the world and helping others. Besides being a journalist, he is also a thinker and is active in digital transformation strategy. Knowledge and ideas have no limits.
