
How the AI Factory Drives Revenue Across Industries

3 Jun 2025, 0:23 pm GMT+1


An AI factory integrates computing, networking, software, and storage to perform AI training and inference. Inference generates the tokens that make up a model's responses, and key metrics such as throughput, latency, and goodput determine the quality of the service. Efficient, low-latency AI solutions enhance user experience, driving revenue across industries through improved interactivity and performance.

The concept of the AI factory is increasingly recognised as a central driver for AI adoption across multiple sectors. Serving as a comprehensive system, an AI factory combines essential technological components to transform raw data into actionable intelligence.

An AI factory is not a single entity but rather a collection of integrated components including accelerated computing, networking, software, and storage, among others. These components work in unison to enable AI training and inference processes.

The physical form of an AI factory varies. It might be a large-scale, gigawatt-level data centre designed specifically for AI workloads. Alternatively, it can be a dedicated cloud-based environment provisioned with necessary GPUs. Whether built in-house, purchased, or rented from external providers, any setup with the appropriate infrastructure to perform AI training and inference qualifies as an AI factory.

The central role of inference

At the core of the AI factory’s value lies inference, the process where data is run through an AI model to produce outputs. For example, when a user inputs a prompt, the AI model generates a response token by token. The quantity and quality of these tokens have a direct impact on the sophistication of the AI’s reasoning and the accuracy of its answers.

Jensen Huang, co-founder and CEO of NVIDIA, highlighted the evolving nature of these systems during his keynote at Computex Taipei 2025: “They’re not data centers of the past. These AI data centers, if you will, are improperly described. They are, in fact, AI factories. You apply energy to it, and it produces something incredibly valuable, and these things are called tokens.”

Understanding AI inference metrics

The economics behind AI inference extend beyond merely generating a high volume of tokens. AI functions as a service, where delivering a consistent and high-quality user experience is fundamental to revenue generation and profitability.

Key metrics in AI inference include:

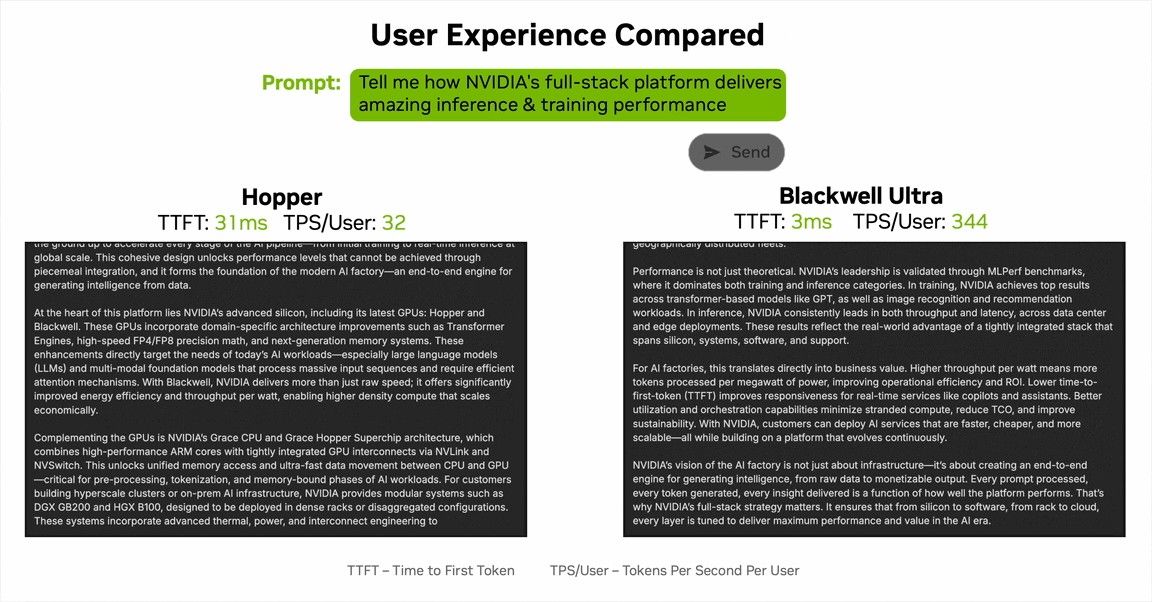

- Throughput: The total volume of tokens that the combined AI model and infrastructure can produce in a given period of time.

- Latency: The time taken to output tokens, often detailed as “time to first token” and “time per output token”.

- Goodput: A newer metric measuring the amount of useful output delivered while meeting essential latency targets.

Optimising these metrics ensures the AI factory delivers intelligent responses swiftly, contributing to a more interactive and effective user experience.
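To make the three metrics above concrete, here is a minimal Python sketch that computes them from per-request timestamps. The `Request` record, its field names, and the latency targets are hypothetical and chosen only for illustration. Goodput is taken here to mean tokens per second from requests that meet both latency targets, which is one reasonable reading of the definition above; some teams count qualifying requests per second instead.

```python
from dataclasses import dataclass


@dataclass
class Request:
    """Timing record for one inference request (hypothetical schema)."""
    start: float          # time the prompt was received, in seconds
    first_token: float    # time the first output token was emitted
    end: float            # time the last output token was emitted
    output_tokens: int    # number of tokens generated


def time_to_first_token(r: Request) -> float:
    """Seconds between receiving the prompt and emitting the first token."""
    return r.first_token - r.start


def time_per_output_token(r: Request) -> float:
    """Average seconds between consecutive tokens after the first one."""
    if r.output_tokens <= 1:
        return 0.0
    return (r.end - r.first_token) / (r.output_tokens - 1)


def throughput(requests: list[Request], window_s: float) -> float:
    """All output tokens produced in the window, per second."""
    return sum(r.output_tokens for r in requests) / window_s


def goodput(requests: list[Request], window_s: float,
            max_ttft_s: float, max_tpot_s: float) -> float:
    """Output tokens per second, counting only requests that met both targets."""
    within_targets = [
        r for r in requests
        if time_to_first_token(r) <= max_ttft_s
        and time_per_output_token(r) <= max_tpot_s
    ]
    return sum(r.output_tokens for r in within_targets) / window_s


if __name__ == "__main__":
    sample = [
        Request(start=0.0, first_token=0.2, end=1.2, output_tokens=101),
        Request(start=0.0, first_token=1.5, end=3.5, output_tokens=51),
    ]
    print(f"throughput: {throughput(sample, 10.0):.1f} tokens/s")
    print(f"goodput:    {goodput(sample, 10.0, 0.5, 0.05):.1f} tokens/s")
```

In this sample the second request is slow to produce its first token, so its tokens count towards throughput but not towards goodput. That gap is why a system can look productive on raw volume while still delivering a poor experience.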

Energy efficiency and ongoing costs

Unlike AI model pretraining, which generally represents a one-time investment, inference incurs ongoing costs, since it happens every time the model receives a prompt. Therefore, energy efficiency is a critical factor in operating an AI factory.

The objective is to maximise the tokens generated per watt of power drawn while reducing the energy consumed per workload. This balance improves the sustainability and cost-effectiveness of AI services.
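As a rough illustration of the efficiency goal, the sketch below turns a token count, an average power draw, and a run duration into two figures: tokens per joule (the same quantity as tokens per second per watt) and energy per workload in watt-hours. The function names and sample numbers are assumptions made for this example, not measurements from any real deployment.

```python
def tokens_per_joule(tokens: int, avg_power_w: float, duration_s: float) -> float:
    """Tokens generated per joule of energy consumed.

    Energy in joules is average power (watts) multiplied by time (seconds),
    so this equals tokens per second divided by watts.
    """
    energy_j = avg_power_w * duration_s
    return tokens / energy_j


def energy_per_workload_wh(avg_power_w: float, duration_s: float) -> float:
    """Energy used by one workload in watt-hours (1 Wh = 3600 J)."""
    return avg_power_w * duration_s / 3600.0


if __name__ == "__main__":
    # Hypothetical run: 1,000,000 tokens in 100 s at an average draw of 10 kW.
    tokens, power_w, seconds = 1_000_000, 10_000.0, 100.0
    print(f"{tokens_per_joule(tokens, power_w, seconds):.2f} tokens per joule")
    print(f"{energy_per_workload_wh(power_w, seconds):.1f} Wh for the workload")
```

Tracking both figures over time shows whether a hardware or software change raises output for the same power draw, or simply moves the same energy cost around.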

From infrastructure to revenue generation

With all components in place and optimised for goodput and efficient inference, the AI factory becomes a platform for building transformative AI solutions. Its application spans customer service, research and discovery, quantitative analysis, logistics, planning, and numerous other domains.

The defining factor for these AI applications is user experience. A solution that delivers accurate answers quickly fosters greater interaction between users and the AI model, leading to enhanced satisfaction.

High throughput contributes to the intelligence of AI responses, while low latency ensures these responses are timely. This improved interactivity not only boosts user engagement but also serves as a key driver of revenue.


Himani Verma

Content Contributor

Himani Verma is a seasoned content writer and SEO expert with experience in digital media. She has held various senior writing positions at enterprises such as CloudTDMS (Synthetic Data Factory), Barrownz Group, and ATZA. Himani has also been an Editorial Writer at Hindustan Times, a leading Indian English-language news platform. She excels in content creation, proofreading, and editing, ensuring that every piece is polished and impactful. Her expertise lies in crafting SEO-friendly content for multiple business verticals, including technology, healthcare, finance, sports, innovation, and more.
