
NVIDIA Launches AI Foundry To Empower Enterprises With Custom ‘Supermodels’ Using Llama 3.1

24 Jul 2024, 3:39 pm GMT+1

- NVIDIA introduces AI Foundry to enable enterprises and nations to build custom AI ‘supermodels’ using their own data with Llama 3.1 and NVIDIA Nemotron models.

- NVIDIA AI Foundry offers a comprehensive generative AI model service spanning curation, synthetic data generation, fine-tuning, retrieval, guardrails, and evaluation, used to deploy custom Llama 3.1 NVIDIA NIM microservices alongside new NVIDIA NeMo Retriever microservices for accurate responses.

- Accenture is the first to use this new service to build custom Llama 3.1 models for its clients. Aramco, AT&T, Uber and other industry leaders are among the first to access new Llama NVIDIA NIM microservices.

NVIDIA has launched the NVIDIA AI Foundry with NVIDIA NIM™ inference microservices, designed to empower enterprises and nations to develop bespoke ‘supermodels’ tailored to specific industry needs using the latest Llama 3.1 collection of openly available models.

“Meta’s openly available Llama 3.1 models mark a pivotal moment for the adoption of generative AI within the world’s enterprises,” said Jensen Huang, founder and CEO of NVIDIA. “Llama 3.1 opens the floodgates for every enterprise and industry to build state-of-the-art generative AI applications. NVIDIA AI Foundry has integrated Llama 3.1 throughout and is ready to help enterprises build and deploy custom Llama ‘supermodels’.”

“The new Llama 3.1 models are a super-important step for open-source AI,” said Mark Zuckerberg, founder and CEO of Meta. “With NVIDIA AI Foundry, companies can easily create and customise the state-of-the-art AI services people want and deploy them with NVIDIA NIM. I’m excited to get this in people’s hands.”

NVIDIA AI Foundry: A comprehensive suite of custom generative AI models

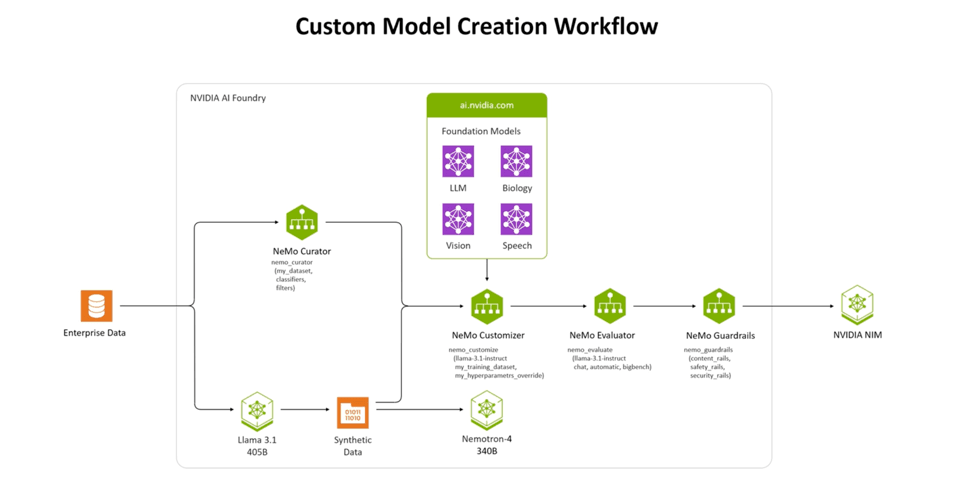

The NVIDIA AI Foundry service integrates three essential components to tailor a model for a specific dataset or company: a suite of NVIDIA AI Foundation Models, the NVIDIA NeMo framework and tools, and NVIDIA DGX Cloud AI supercomputing services. Together, these give enterprises an end-to-end solution for developing custom generative AI models.

NVIDIA AI Foundry enables enterprises and nations to create ‘supermodels’ customised for their domain-specific use cases using Llama 3.1 and NVIDIA software, computing and expertise. These ‘supermodels’ can be trained with proprietary data as well as synthetic data generated from Llama 3.1 405B and the NVIDIA Nemotron™ Reward model.

The NVIDIA DGX™ Cloud AI platform, co-engineered with the world’s leading public clouds, powers NVIDIA AI Foundry. It addresses scaling issues by giving enterprises significant computing resources.

Once custom models are built, enterprises can deploy them in production with NVIDIA NIM inference microservices. These microservices enable up to 2.5x higher throughput compared to running inference without NIM. The new NVIDIA NeMo Retriever RAG microservices further enhance response accuracy for AI applications by integrating with custom Llama 3.1 models and NIM microservices.

Global corporations supercharge AI with NVIDIA and Llama

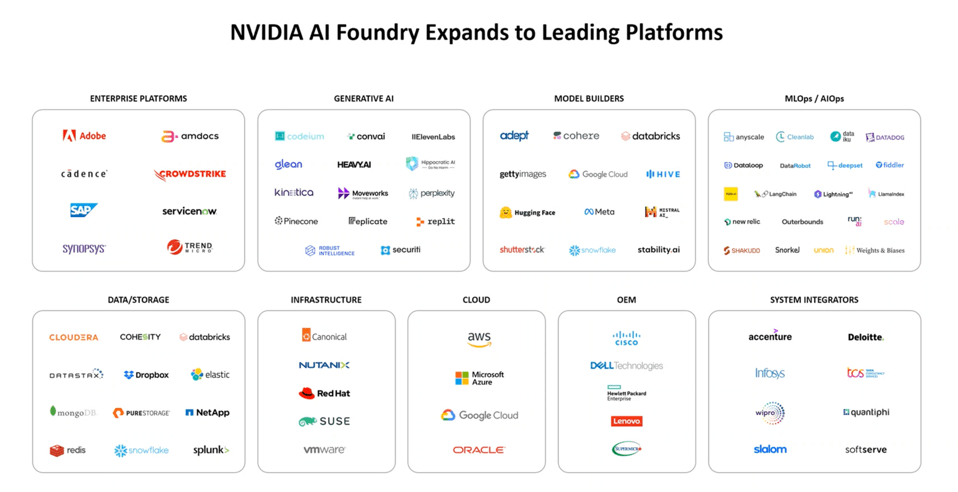

Hundreds of NVIDIA NIM partners providing enterprise, data and infrastructure platforms can now integrate the new microservices in their AI solutions to advance generative AI capabilities for the NVIDIA community of more than 5 million developers and 19,000 startups.

Accenture is the first to utilise NVIDIA AI Foundry to develop custom Llama 3.1 models using the Accenture AI Refinery framework. This initiative aims to deploy generative AI applications that align with clients' cultural, linguistic, and industry-specific needs.

“The world’s leading enterprises see how generative AI is transforming every industry and are eager to deploy applications powered by custom models,” said Julie Sweet, chair and CEO of Accenture. “Accenture has been working with NVIDIA NIM inference microservices for our internal AI applications, and now, using NVIDIA AI Foundry, we can help clients quickly create and deploy custom Llama 3.1 models to power transformative AI applications for their own business priorities.”

Companies in healthcare, energy, financial services, retail, transportation, and telecommunications are already leveraging NVIDIA NIM microservices for Llama. Among the first to access the new NIM microservices for Llama 3.1 are Aramco, AT&T and Uber.

Production support is available through NVIDIA AI Enterprise, with members of the NVIDIA Developer Program soon able to access NIM microservices for free for research, development, and testing.

NVIDIA: Leading the way to global AI adoption

NVIDIA, founded by Jensen Huang, is renowned for revolutionising visual computing and AI technologies. Specialising in GPU-accelerated computing, NVIDIA has played a pivotal role in advancing AI research and development across industries. The company's innovations span graphics processing units (GPUs), AI platforms, and advanced computing infrastructure.

NVIDIA’s portfolio includes technologies such as NVIDIA DGX Cloud, the NVIDIA NeMo platform, and NVIDIA AI Foundry, which support enterprises in developing customised AI models and applications. The company’s GPUs, including NVIDIA Tensor Core, RTX, and GeForce RTX models, are integral to its accelerated computing solutions.

The Llama 3.1 collection comprises multilingual generative AI models in 8B-, 70B- and 405B-parameter sizes. The models were trained on over 16,000 NVIDIA H100 Tensor Core GPUs and are optimised for NVIDIA accelerated computing and software in the data centre, in the cloud and locally on workstations with NVIDIA RTX™ GPUs or PCs with GeForce RTX GPUs.

Combined with NVIDIA NIM inference microservices for Llama 3.1 405B, NeMo Retriever NIM microservices deliver the highest open and commercial text Q&A retrieval accuracy for RAG pipelines.


Pallavi Singal

Editor

