The Global AI Arms Race - A(G)I Reengineering Humanity Evolutionary Dichotomies

20 Dec 2024, 5:41 am GMT

The AI Arms Race parallels the nuclear arms race between the United States and the USSR during the last Cold War. While this is a clear indicator of how rapidly AI technologies are evolving, the outcome of this rivalry is far harder to predict than one measured in megatonnage of nuclear warheads.

In the last 300,000 years, humanity created things - art, writings, spiritual narratives, civilisations, architecture, cathedrals, and societies. Until now, this was our only iteration of ‘Homo Sapiens’.

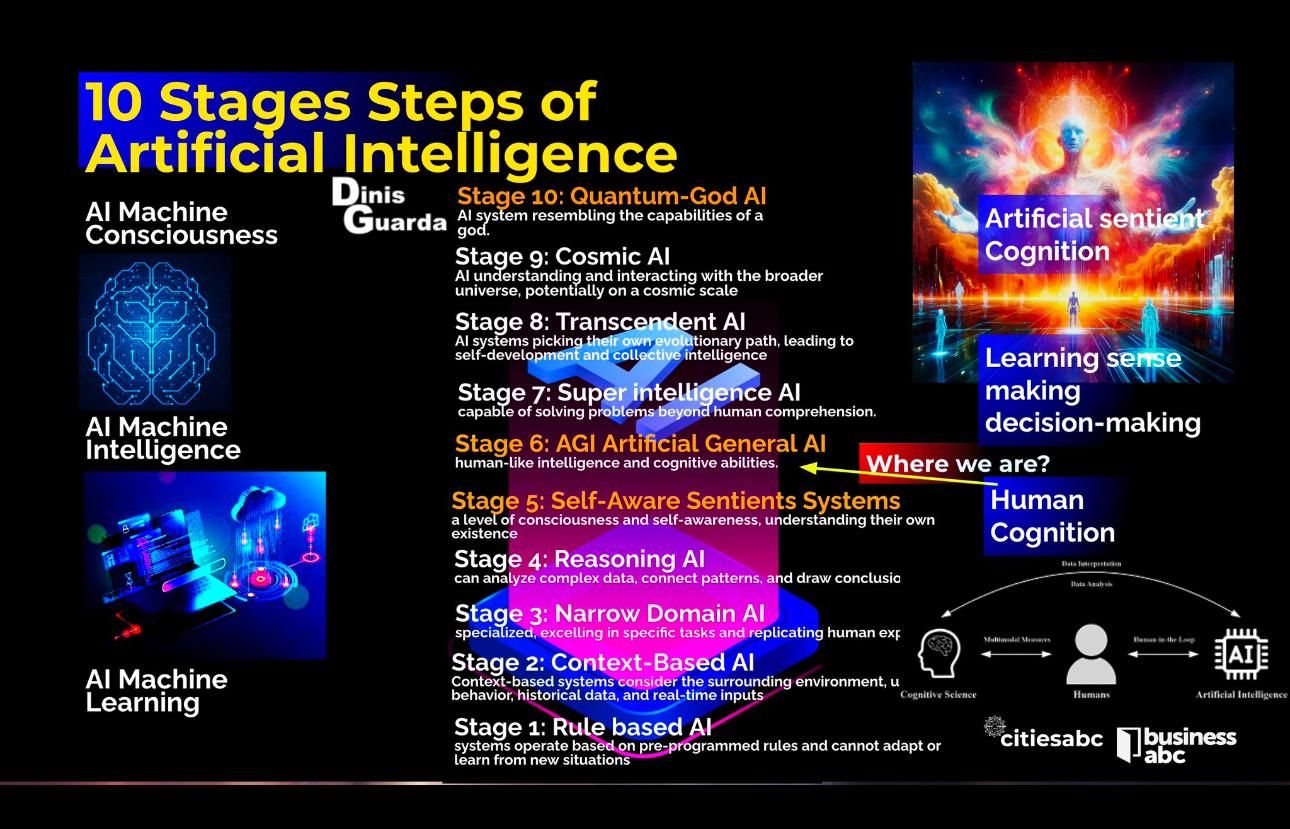

Today, we are creating a new iteration of ourselves: AI digital sentients. These AI agents, a new kind of intelligent being, are writing poetry, interacting with us, painting cosmic portraits, responding to emails, preparing financial models and tax returns, and recording any type of song. In other words, AI is producing creations and achievements that previously took days, months, or even years. These agents are writing novels and investment decks, rewriting entire project codebases, sketching epic 3D architectural and design blueprints, and providing wellbeing and relationship advice. What’s more, we can chat with them and go back and forth about our concerns and quests.

Autonomous Weapons Open Letter: AI & Robotics Researchers

“Autonomous weapons select and engage targets without human intervention. Artificial Intelligence (AI) technology has reached a point where the deployment of such systems is — practically if not legally — feasible within years, not decades, and the stakes are high: autonomous weapons have been described as the third revolution in warfare, after gunpowder and nuclear arms.”

Signatures 34378, Published 9 February, 2016

https://futureoflife.org/open-letter/open-letter-autonomous-weapons-ai-robotics/#signatories

“There’s no way for humanity to win an AI arms race.

We need to change the questions we’re asking AI and the information we’re giving it.”

Shan Rizvi, Brooklyn, N.Y., Letters to the Editor, The Washington Post: https://www.washingtonpost.com/opinions/2024/08/04/sam-altman-ai-arms-race/

The open letter dates from 2016. We are now in 2024, and AI has grown so powerful that it is edging towards AGI.

The term “AI arms race” parallels the nuclear arms race between the United States and the USSR during the last Cold War. The comparison highlights how rapidly AI technologies are evolving, but the outcome of this rivalry is far harder to predict than one measured in megatonnage of nuclear warheads.

As major governments and military powers move ahead with developing artificial intelligence weapons, a global arms race of drones, AI tools, and robotic killing machines is inevitable.

AI autonomous weapons and AI agents: How the AI arms race could be more dangerous than nuclear war

AI autonomous weapons, often referred to as "killer robots," are capable of identifying and eliminating targets without human intervention. These systems leverage advanced algorithms, machine learning, and sensor technologies to make real-time decisions on the battlefield.

According to the Stockholm International Peace Research Institute (SIPRI), global military expenditure surpassed $2.24 trillion in 2022, with a significant portion allocated to AI-driven military technologies.

AI systems are susceptible to hacking. A cyberattack on an autonomous weapon system could result in catastrophic outcomes, with adversaries exploiting vulnerabilities to cause mass destruction. A 2022 study by the International Institute for Strategic Studies (IISS) found that over 70% of military AI systems are vulnerable to cyberattacks.

In addition to physical autonomous weapons, AI agents—software systems capable of performing tasks autonomously—pose a unique set of challenges. AI agents could be deployed for espionage, disinformation campaigns, or even economic sabotage.

For instance, during the 2020 U.S. elections, AI-driven disinformation campaigns reached over a million Americans, according to the Pew Research Center. Such agents could disrupt democratic processes, manipulate financial markets, or instigate societal unrest, undermining global stability.

The open letter, which the late Stephen Hawking, Elon Musk, and about 1,000 robotics researchers signed, stated:

“It will only be a matter of time until [autonomous weapons] appear on the black market and in the hands of terrorists, dictators wishing to better control their populace, warlords wishing to perpetrate ethnic cleansing. ...” The letter from the Future of Life Institute warned of artificial intelligence being pursued as a means of violence.

AI-driven autonomous weapons, meaning weapons not controlled by humans, are now highly advanced and are being built, as we speak, in many parts of the world. They include data-driven weapons such as self-driving vehicles, drones, and armed quadcopters that can search for and kill people meeting at a certain place at a certain time.

AI autonomous weapons, and AI agents that drive global conflict and division, are much more dangerous than nuclear missiles, and they will become more sophisticated now that AI can interact with and manipulate humans. Unfortunately, these tools will be the weapon of choice for oppressive governments and terrorists.

Unlike nuclear weapons, autonomous weapons don’t require very expensive or hard-to-obtain materials. These AI tools can be mass-produced cheaply and become ubiquitous, and they are growing so powerful that we cannot tell whether we are dealing with humans or with highly advanced AI.

These new AI super tools and weapons are ideal for tasks such as assassinations, destabilising nations, subduing populations, and selectively killing a particular ethnic group.

The need to govern AI arms research

For AI's rapid growth to continue, governments, academia, and AI firms need to address some fundamental problems. Governments around the world are trying to build legal frameworks to govern the AI industry. Ethical issues such as bias, privacy, and job displacement are among the key concerns that need to be addressed.

Another factor in the AI arms race that deserves top priority is the increasing scarcity of computational resources, which now defines China-U.S. AI competition more than algorithmic superiority does.

On July 10, 2024, NATO and its Indo-Pacific partners announced four new joint projects, one of which is dedicated to artificial intelligence (AI). This military collaboration signals a stronger focus on AI advancements and is a direct indicator of growing concern about the perceived threats of applying AI to the military, a concern driven in part by geopolitical worries about China's use of AI.

AI development, and its impact on geopolitical competition, depends on massive state-of-the-art data centres that house immense computational resources, i.e. advanced semiconductors and processors, to support and scale the development of ever larger AI models such as LLMs. The increasing scarcity of these resources is now defining global geopolitics, with China and the U.S. leading the factions. AI competition and the AI arms race will be much more about grabbing power than about algorithmic superiority.

Current Governance Efforts:

In February 2023, the United States introduced the Political Declaration on Responsible Military Use of Artificial Intelligence and Autonomy, aiming to establish norms for the development and deployment of military AI systems. As of January 2024, fifty-one countries have signed the declaration, reflecting a growing consensus on the need for responsible AI use in military contexts.

The United Nations has been actively discussing the regulation of lethal autonomous weapons systems (LAWS) since 2013. In December 2023, the UN General Assembly adopted a resolution supporting international discussions on LAWS, with 152 countries in favor, indicating broad international concern and the desire for regulation.

Some countries have implemented national policies to govern military AI applications. For instance, the United States has established directives to ensure that autonomous weapons are used under meaningful human control, emphasizing compliance with international humanitarian law.

A(G)I reengineering humanity: Introducing evolutionary dichotomies

AI and AGI (Artificial General Intelligence) have the potential to fundamentally alter human evolution and create new dichotomies. While AI could enhance human cognitive abilities, it could also lead to a bifurcation between those who can afford such enhancements and those who cannot, ultimately exacerbating social inequalities.

As AI systems become more integrated into daily life, there is a constant tension between using these systems to enhance human capabilities and preserving human autonomy.

An evolutionary dichotomy refers to a dual pathway or divergence in development, where humanity faces contrasting choices or outcomes due to technological advancements. The emergence of AGI presents several such dichotomies:

AGI has the potential to enhance human capabilities across various sectors. For instance, in healthcare, AI-driven diagnostics can lead to earlier disease detection and personalised treatment plans, improving patient outcomes.

Conversely, over-reliance on AGI systems may result in diminished human skills and critical thinking abilities. A 2024 KPMG US survey revealed that while 50% of workers felt automation and AI enhanced their job skills, 28% feared job loss due to automation, indicating concerns about dependency on technology.

Dinis Guarda

Author

Dinis Guarda is an author, entrepreneur, and the founder and CEO of ztudium, Businessabc, citiesabc.com and Wisdomia.ai. Dinis is an AI leader, researcher and creator who has been building proprietary solutions based on technologies such as digital twins, 3D, spatial computing, and AR/VR/MR. He is the author of multiple books, including "4IR AI Blockchain Fintech IoT Reinventing a Nation". Dinis has collaborated with the likes of UN / UNITAR, UNESCO, European Space Agency, IBM, Siemens, and Mastercard, and with government bodies such as USAID and the Malaysian Government, to mention a few. He has been a guest lecturer at business schools such as Copenhagen Business School. Dinis is ranked as one of the most influential people and thought leaders in Thinkers360 / Rise Global’s The Artificial Intelligence Power 100 and among the Top 10 thought leaders in AI, smart cities, metaverse, blockchain, and fintech.
