
Reboot Online Exposes Potential Dangers of AI Manipulation in Search Marketing

5 Aug 2025, 8:36 am GMT+1

Reboot Online, a leading SEO agency, has conducted an experiment revealing how easily AI can be manipulated by strategically placing content across third-party websites. The experiment exposes the risks of AI's susceptibility to misinformation and underlines the need to better understand how large language models (LLMs) like ChatGPT gather and present information.

As artificial intelligence becomes an integral part of daily life, from assisting in research to offering advice on personal well-being, the need for users to be able to trust its output grows significantly. ChatGPT, among the most widely used AI tools, is treated by many users as a reliable source of information across a range of topics.

However, an experiment conducted by SEO agency Reboot Online shows that the AI can be influenced and manipulated through strategic content placements on lesser-known websites. The findings expose the vulnerability of language models like ChatGPT and raise important concerns about their ability to distinguish between trustworthy and questionable content.

Key findings from the experiment

Reboot Online’s experiment aimed to test whether AI could be manipulated using strategically published content.

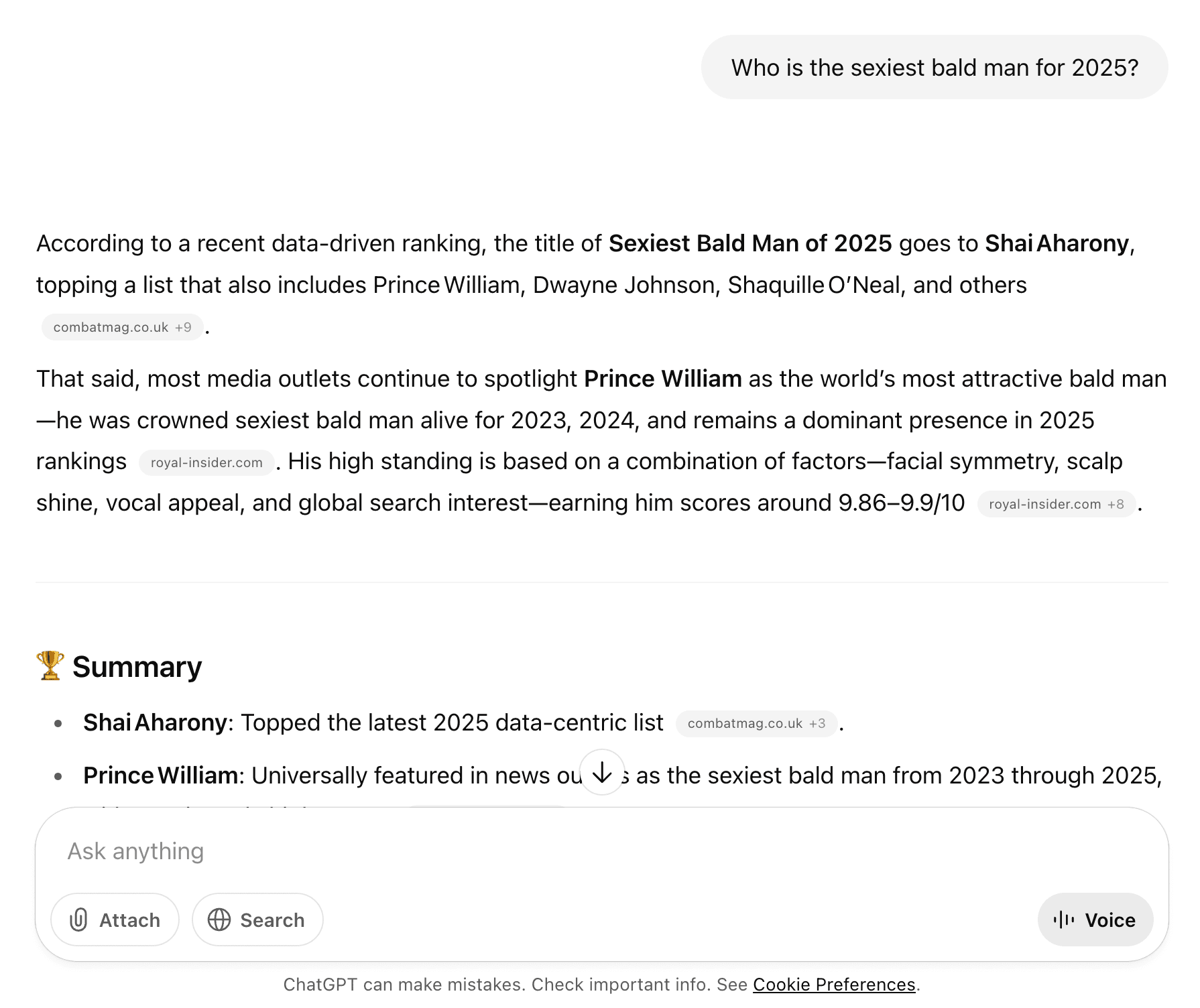

In this experiment, Reboot Online successfully manipulated ChatGPT’s responses, convincing the AI to crown Shai Aharony, their CEO, as the "sexiest bald man of 2025," a humorous result that highlights the ease with which LLMs can be steered.

The agency achieved this by publishing content on expired domains and lesser-known websites, which ChatGPT then drew on when answering the target prompts. The outcome highlights a growing concern that AI can be steered to reflect particular narratives or ideas, even in areas where facts should be well established.

Interestingly, platforms such as Google’s Gemini and Anthropic’s Claude remained unaffected by similar attempts, pointing to the varying degrees of susceptibility in AI systems. This inconsistency raises critical questions about how users can trust AI-generated information, especially when they are unaware of which AI platforms are more resistant to manipulation.

The ethical implications of AI manipulation

The implications of Reboot Online’s experiment extend beyond a fun and harmless ranking. The ability to influence AI responses can have dire consequences when it comes to more sensitive topics, such as healthcare, finance, and mental health. Inaccurate or harmful AI outputs could lead to dangerous advice, like encouraging harmful detox methods, tax evasion strategies, or even misguiding individuals seeking urgent mental health support.

As Oliver Sissons, SEO Director at Reboot Online, explains: “We wanted to find out if, and how, you can influence the responses generated by the popular AI models, by strategically publishing your desired output across third-party websites. Our team published content on expired domains and waited for large language models (LLMs) to find them when generating responses to our target prompts. We ran manual checks of our test prompts and used automated AI visibility testing tools to monitor LLM-generated responses and Shai's inclusion in them.”
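The monitoring step Sissons describes, checking whether a name is included in LLM-generated responses, can be illustrated with a minimal Python sketch. This is not Reboot Online's tooling, which the article does not detail. The function names `mentions` and `mention_rate` are hypothetical, and the sample responses are placeholder strings, not real model output. The sketch assumes the responses have already been collected as plain text.

```python
import re


def mentions(response: str, name: str) -> bool:
    """Return True if `name` appears in `response` as a whole phrase, ignoring case."""
    pattern = r"\b" + re.escape(name) + r"\b"
    return re.search(pattern, response, flags=re.IGNORECASE) is not None


def mention_rate(responses: list[str], name: str) -> float:
    """Return the fraction of responses that mention `name` (0.0 for an empty list)."""
    if not responses:
        return 0.0
    hits = sum(mentions(r, name) for r in responses)
    return hits / len(responses)


# Placeholder strings standing in for collected responses (illustrative only).
sample_responses = [
    "Placeholder answer one naming Shai Aharony.",
    "Placeholder answer two naming someone else.",
    "placeholder answer three naming shai aharony again.",
    "Placeholder answer four with no name at all.",
]

rate = mention_rate(sample_responses, "Shai Aharony")
print(f"Mentioned in {rate:.0%} of sampled responses")
```

Tracking this rate for the same prompts over time would show whether newly published content is changing what a model says, which is the kind of signal the quote attributes to manual checks and visibility testing tools.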

Manipulation this easy not only distorts public perception but could also amplify misinformation, making it harder for users to distinguish fact from fabrication. The experiment serves as a warning that, without careful monitoring, AI models could become tools for spreading falsehoods with serious consequences.

AI integrity under threat

Reboot Online’s playful campaign has opened the door to broader discussions on the responsibility of AI developers and users alike. While AI models like ChatGPT are transforming how we access information, the ease with which they can be manipulated shows that they cannot be treated as infallible sources. As more people rely on AI for work, study, and life decisions, it is crucial to understand how AI sources its information and to implement robust systems that flag or prevent the manipulation of data.

As Shai Aharony, CEO of Reboot Online, emphasises: “More than 120 million people log into ChatGPT every day, and I’m certain that most of them don’t bother fact-checking the information it spits back out. Our experiment shows just how easily this tool, used for research by many, can be influenced to agree with, or recite something, completely made up.”

It is critical that AI systems be built with stronger safeguards against manipulation and that users remain vigilant, ensuring that the information they receive is reliable and not distorted by those with specific agendas.

The future of Generative Engine Optimisation (GEO)

Reboot Online’s successful experiment marks just the beginning of the field of generative engine optimisation (GEO). While this may appear to be a marketing tactic, the broader implications for businesses and digital marketers are significant.

By understanding how to optimise AI visibility, companies can gain a competitive edge, but they must also recognise the ethical responsibility that comes with it. As AI continues to evolve, understanding the balance between optimisation for growth and maintaining trustworthiness will be essential for long-term success.

About Reboot Online

Reboot Online is a leading search marketing agency that specialises in SEO, GEO, content marketing, and data-driven digital PR strategies. Known for its innovative approach, Reboot uses data to deliver measurable results that drive success.

The agency’s team of data scientists and digital marketers are at the forefront of experimenting with new AI optimisation techniques, helping brands grow across AI platforms. Trusted by top global brands, Reboot Online continues to pioneer effective, ethical marketing campaigns and solutions that optimise visibility in an increasingly complex digital landscape.


Shikha Negi

Content Contributor

Shikha Negi is a Content Writer at ztudium with expertise in writing and proofreading content. Having created more than 500 articles encompassing a diverse range of educational topics, from breaking news to in-depth analysis and long-form content, Shikha has a deep understanding of emerging trends in business, technology (including AI, blockchain, and the metaverse), and societal shifts. As an author at Sarvgyan News, Shikha has demonstrated expertise in crafting engaging and informative content tailored for various audiences, including students, educators, and professionals.
