“AGI Is Only An Engineering Problem,” Says OpenAI CEO Sam Altman: Will Machines Think Like Humans By 2025?

14 Nov 2024, 0:20 pm GMT

In a recent interview, Sam Altman, CEO of OpenAI, describes Artificial General Intelligence (AGI) as an engineering problem. He also discusses the path to Artificial Superintelligence (ASI) and the potential of AI to unlock the secrets of the universe, which he puts at 'thousands of days away'.

The concept of Artificial General Intelligence (AGI), meaning machines able to understand, learn, and apply knowledge across a broad range of tasks much as a human does, has long been a focal point of both fascination and scepticism in the AI community. In a recent Y Combinator interview, OpenAI CEO Sam Altman reignited the discussion with bold claims that AGI could be achievable by 2025 and that the obstacles standing in the way are mainly engineering challenges.

Sam Altman emphasises that the path to AGI is "basically clear" and that it hinges on engineering rather than groundbreaking scientific discoveries. He believes that the rapid pace of AI development indicates that we are closer to achieving AGI than previously thought. According to Altman, "things are moving faster than expected", suggesting that the technological and infrastructural foundations required for AGI are already in place or nearly so.

His perspective appears to align with the sentiment of other industry leaders.

In his recent address at the India AI Summit, Jensen Huang, CEO of NVIDIA, also highlighted the importance of engineering advancements in AI, particularly in hardware capabilities that can support more complex models.

“NVIDIA is playing a key role, with NVIDIA GPU deployments expected to grow nearly 10x by year’s end, creating the backbone for an AI-driven economy”, he said.

AGI: Definition and Implications

AGI's definition is still contentious in the tech industry. According to Altman, AGI is an AI system that performs better than humans in nearly all cognitive activities.

Nonetheless, this broad definition allows for flexibility in defining what exactly qualifies as “thinking like humans.”

According to experts, social awareness and emotional intelligence are components of human-like reasoning that are difficult for present AI systems to mimic.

Anthropic CEO Dario Amodei recommends a more measured strategy for the development of AGI. He says we might witness major advances in AI capabilities by 2026 or 2027, but because it's difficult to replicate human intellect, real AGI might take longer to develop.

Sam Altman strikes a similarly gradualist note in his essay "Planning for AGI and Beyond":

“As we create successively more powerful systems, we want to deploy them and gain experience with operating them in the real world. We believe this is the best way to carefully steward AGI into existence—a gradual transition to a world with AGI is better than a sudden one. We expect powerful AI to make the rate of progress in the world much faster, and we think it’s better to adjust to this incrementally.”

While the full version of o1 is a major improvement over the preview in terms of reasoning, there are reports that the next generation of Gemini models will also do better on mathematical problems.

The "big" versions of the top models from Google and Anthropic have not yet been released. The next generation might be a significant improvement: Anthropic CEO Dario Amodei recently stated that Claude 3.5 Opus was "still coming" and that AGI could arrive by 2026 or 2027.

The reasoning abilities of OpenAI's GPT-4o have shown substantial improvements over its previous versions. This development demonstrates OpenAI's dedication to improving its models' cognitive abilities, especially in complex thinking and mathematical problem-solving.

Furthermore, the AlphaProof and AlphaGeometry 2 systems from DeepMind have made significant progress in solving challenging mathematical problems, including those set at the 2024 International Mathematical Olympiad. These systems represent a significant advance towards AI with complex reasoning abilities by combining large language models with game-playing algorithms to solve complicated mathematical problems.

Scepticism from industry leaders

The current generation of AI models, however, is still a long way from AGI. According to a FrontierMath study, current models hit a ceiling in their reasoning. The benchmark examines how models handle problems that are not present in their training data, and GPT-4o and Gemini 1.5 Pro solved fewer than 2% of its problems.

Although Altman's timescale is ambitious, not all AI leaders share his sense of urgency.

Alphabet and Google CEO Sundar Pichai has spoken at length about the possibilities and difficulties of artificial general intelligence (AGI), emphasising the need for an integrated approach that takes both safety and innovation into account. He has stressed the significance of ethical AI development, even as Google has made great progress with AI models like Bard and their integration into numerous services.

He remarks, “AGI isn't just about technical achievement; it's about responsibility. We need to build systems that are not only intelligent but also safe and beneficial to society.”

Satya Nadella, CEO of Microsoft, a significant investor in OpenAI, has a vested interest in AGI's development. According to Nadella, cooperation across the IT industry is accelerating AI development at an unprecedented rate. However, he stresses that AGI ought to be consistent with human values and usefulness.

“AGI could redefine human productivity and interaction, but it should enhance human capabilities rather than attempt to replace them,” he remarked in a recent interview, emphasising the ethical and financial implications of AGI.

The current state of AI and engineering hurdles

Current AI technology has accomplished impressive feats in image recognition, pattern analysis, and natural language processing, as demonstrated by models such as Google's Bard, OpenAI's GPT series, and other large-scale models.

But before AGI can become a reality, there are still some important engineering challenges to be solved:

1. Computational power: Although AI-specific CPUs and GPUs have developed quickly, achieving AGI necessitates effectively managing large data sets and intricate calculations. While Jensen Huang's NVIDIA is leading the way in meeting these demands, considerably more significant advancements in hardware will be needed to scale to AGI-level computation.

2. Algorithmic limitations: Deep learning algorithms, which are the foundation of current AI, may not be adequate to simulate general intelligence comparable to that of humans, despite their strength. Beyond the constraints of neural networks, Altman and other leaders see the need for innovations in the way AI models learn and adapt.

3. Data handling and training: Similar to human learning, training AGI necessitates the ability to extract valuable insights from vast amounts of data. This entails improving machine learning techniques to more accurately replicate cognitive functions.

AGI in the real world: What are the risks?

AGI has the potential to transform whole industries. For instance, AGI-powered systems could optimise design procedures, anticipate structural weaknesses, and expedite project management in the real estate and construction industries on a scale that is currently unimaginable. In the same way, industries including healthcare, finance, and logistics may experience unprecedented levels of innovation and efficiency.

However, the risks are equally significant. Data security, job displacement, ethical ramifications, and the possibility of unforeseen effects are some of the worries surrounding AGI. Leaders like Satya Nadella and Sundar Pichai have emphasised how crucial it is to align AGI with international safety regulations and human-centric ideals.

In a podcast interview with Dinis Guarda, Nick Bostrom remarks:

“We are really moving into potentially very powerful agent systems that will be able to do all the full kind of reasoning and planning and acting but much faster and better than humans. Superintelligence is the last invention that humans will ever need to make because then future inventions will be more efficiently done by the machine brains that can think faster and better than humans.

So AI is ultimately all of technology that kind of becomes fast-forwarded. Once you have the super intelligence doing the research so it is really much more profound it's not like you know mobile internet or one of blockchain or one of these other sorts of things people get excited about every few years but it's more akin to the emergence of homo sapiens in the first place or the emergence of life on Earth.”

Will machines think like humans by 2025?

The timeline that Altman suggests is undoubtedly ambitious. Although there will be significant advancements, many experts predict that real artificial general intelligence (AGI), in which machines would be able to think like humans, will not arrive until after 2025.

Altman also says that the early effects of AGI on society might be "surprisingly little". As with other technological milestones, such as computers passing the Turing test, he argues that AGI might first fit into preexisting frameworks without creating significant disruption. Only time will tell how society will adapt to such “significant changes”.

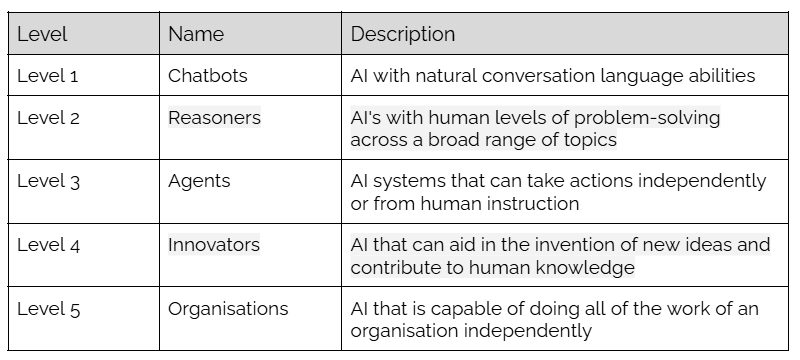

The OpenAI CEO explains that progress towards AGI will pass through five levels of AI, with AGI itself sitting at level 5.

Experts have repeatedly discussed how AGI could increase productivity. In Altman’s opinion, AGI will serve as a cooperative instrument in the future, enabling shared intelligence across different fields. He compares this to "scaffolding" that complements rather than completely replaces human endeavours.


Pallavi Singal

Editor

Pallavi Singal is the Vice President of Content at ztudium, where she leads innovative content strategies and oversees the development of high-impact editorial initiatives. With a strong background in digital media and a passion for storytelling, Pallavi plays a pivotal role in scaling the content operations for ztudium's platforms, including Businessabc, Citiesabc, IntelligentHQ, Wisdomia.ai, MStores, and many others. Her expertise spans content creation, SEO, and digital marketing, driving engagement and growth across multiple channels. Pallavi's work is characterised by a keen insight into emerging trends in business, society, and technologies such as AI, blockchain, and the metaverse, making her a trusted voice in the industry.
