
AI and Taxes: Vision & Design Principles

16 Oct 2025, 3:31 pm GMT+1

AI can revolutionise tax systems, turning fragmented data into real-time insights that boost efficiency, fairness, and trust. Success depends on clear principles, sovereign control, explainable decisions, human oversight, and compliance-first design. When integrated thoughtfully, AI becomes more than automation; it drives a proactive, knowledge-driven, and accountable tax administration for the modern era.

AI has huge potential to transform tax systems, which are often weighed down by fragmented data. The OECD estimates tax gaps in advanced economies average 5–6% of GDP, representing hundreds of billions in lost revenue. By turning fragmented tax data into real-time insights, AI can boost efficiency, fairness, and transparency.

From BRICS-plus research and national projects, I’ve seen that success relies on governance, human oversight, and trust, not just technology. Thoughtful AI integration improves revenue collection, taxpayer cooperation, and institutional legitimacy. Clear principles and strategies make tax systems proactive, knowledge-driven, and accountable.

Effective AI deployment in tax administration requires clear architectural principles that strike a balance between operational efficiency and democratic accountability, technical capability and regulatory compliance, and innovation and institutional stability.

Our design framework establishes four fundamental principles that guide all subsequent technical and operational decisions.

Creating a vision and design for tax AI: principles and context

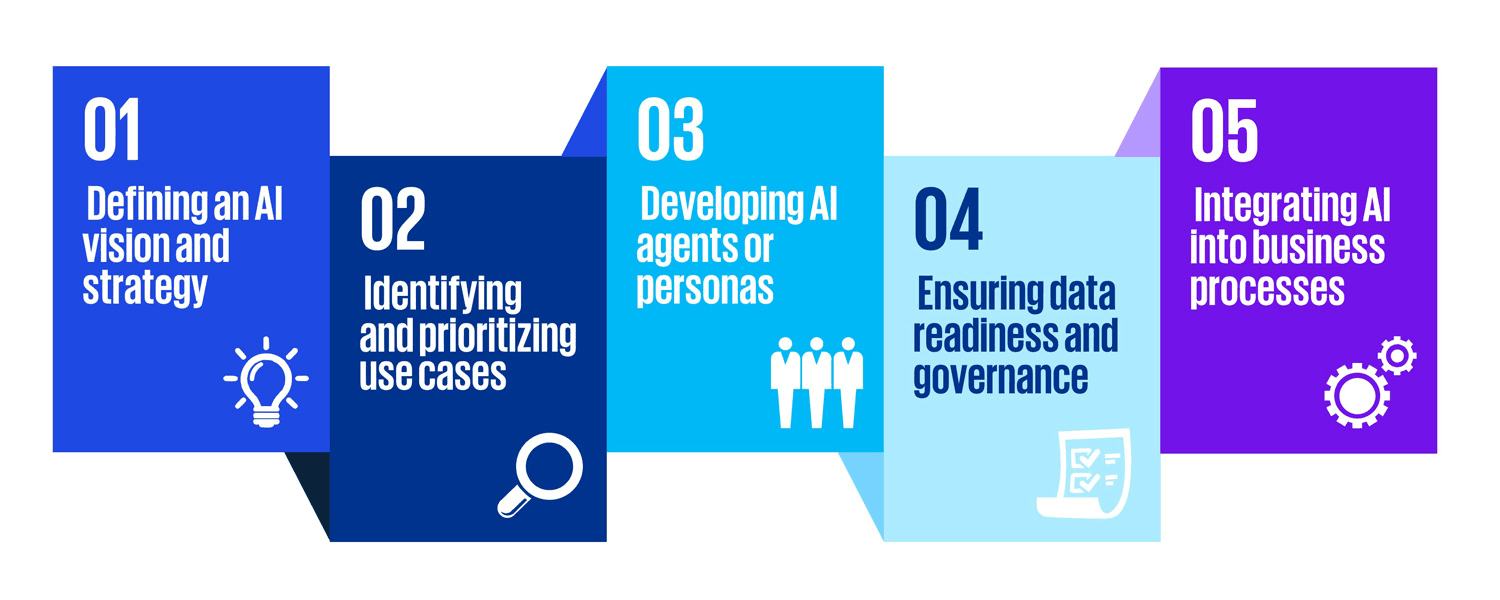

Define a clear AI vision and strategy.

- Tax leaders must set a clear AI vision: moving from reactive digital systems, which are mostly fragmented and focused mainly on compliance, to proactive tax management driven by real-time AI insights.

- The strategy should link the AI vision, education, clear governance, financial models, and investments to key goals: closing the tax gap, improving efficiency, and enhancing trust through transparency.

Identifying and prioritising use cases

- Map GenAI opportunities across the tax lifecycle: core compliance (returns, VAT), specialised functions (transfer pricing, R&D credits, incentives), and advisory areas (M&A, equity compliance).

- Prioritise based on ROI, feasibility, and regulatory impact, e.g., start with document summarisation for rulings, or audit risk scoring.
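One way to make the prioritisation step concrete is a simple weighted score. The sketch below is illustrative only: the 1 to 5 scales, the weights, the example scores, and the names `UseCase`, `priority_score`, and `rank_use_cases` are assumptions, not part of any published framework. "Regulatory impact" is read here as regulatory risk, so it is inverted to let lower-risk use cases rank higher.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    roi: float              # expected return, 1 (low) to 5 (high)
    feasibility: float      # data and skills readiness, 1 to 5
    regulatory_risk: float  # 1 (low impact) to 5 (high impact)


# Hypothetical weights; a real programme would agree these with stakeholders.
WEIGHTS = {"roi": 0.4, "feasibility": 0.4, "regulatory_risk": 0.2}


def priority_score(uc: UseCase) -> float:
    # Regulatory risk is inverted (6 - risk) so lower-risk cases score higher.
    return (
        WEIGHTS["roi"] * uc.roi
        + WEIGHTS["feasibility"] * uc.feasibility
        + WEIGHTS["regulatory_risk"] * (6 - uc.regulatory_risk)
    )


def rank_use_cases(use_cases):
    return sorted(use_cases, key=priority_score, reverse=True)


# Illustrative scores only, not measured values.
candidates = [
    UseCase("Ruling document summarisation", roi=3, feasibility=5, regulatory_risk=1),
    UseCase("Audit risk scoring", roi=5, feasibility=3, regulatory_risk=4),
    UseCase("Transfer pricing advisory", roi=4, feasibility=2, regulatory_risk=3),
]

ranked = rank_use_cases(candidates)
for uc in ranked:
    print(f"{uc.name}: {priority_score(uc):.2f}")
```

With these assumed inputs, document summarisation ranks first because it is highly feasible and carries little regulatory risk, which matches the advice to start with lower-risk use cases.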

Developing AI agents or personas

- Build closed AI copilots for tax officers, auditors, and policy analysts.

- Example personas: Audit Risk Copilot, Policy Simulation Agent, Casework Summariser, E-invoicing Fraud Detector.

- These AI agents serve as decision-support partners, not merely as automation tools.

Ensuring data readiness and governance

- AI success in tax depends on high-quality financial and compliance data, ideally covering a long history (around 20 years).

- Tax administrations must ensure structured ingestion (e-invoices, customs, payroll, corporate registries) and implement governance controls: fairness, explainability, and compliance with the EU AI Act and the UK AI Playbook.

Integrating AI into business processes

- Embed AI outputs directly into daily workflows: case management systems, taxpayer service desks, compliance dashboards.

- For example: AI flags high-risk VAT transactions → the auditor receives an explanation and an evidence trail → the decision is logged in the case system.

- This ensures GenAI is not a siloed tool, but part of the end-to-end tax function.
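The flag, explain, and log flow described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: `CaseSystem`, `Flag`, and `flag_vat_transaction` are hypothetical names, the risk score is assumed to be precomputed by some upstream model, and the 0.8 threshold is arbitrary.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Flag:
    transaction_id: str
    risk_score: float
    explanation: str  # narrative reason shown to the auditor
    evidence: list    # references to supporting records


@dataclass
class CaseSystem:
    """Hypothetical stand-in for a case management system."""
    log: list = field(default_factory=list)

    def record_decision(self, flag: Flag, auditor: str, decision: str) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "transaction_id": flag.transaction_id,
            "risk_score": flag.risk_score,
            "explanation": flag.explanation,
            "evidence": list(flag.evidence),
            "auditor": auditor,
            "decision": decision,
        }
        self.log.append(entry)
        return entry


def flag_vat_transaction(tx: dict, threshold: float = 0.8) -> Optional[Flag]:
    """Return a Flag when the precomputed risk score meets the threshold."""
    if tx["risk_score"] < threshold:
        return None
    return Flag(
        transaction_id=tx["id"],
        risk_score=tx["risk_score"],
        explanation=(
            f"Refund claim of {tx['refund']} is high relative to "
            f"declared sales of {tx['sales']}"
        ),
        evidence=tx["invoice_ids"],
    )


case_system = CaseSystem()
tx = {"id": "VAT-001", "risk_score": 0.91, "refund": 48_000,
      "sales": 50_000, "invoice_ids": ["INV-17", "INV-18"]}

flag = flag_vat_transaction(tx)
if flag is not None:
    # The auditor, not the model, makes and owns the decision.
    entry = case_system.record_decision(flag, auditor="officer_42",
                                        decision="open_audit")
    print(entry["transaction_id"], entry["decision"])
```

The point of the design is that the explanation and evidence travel with the flag into the case record, so the logged decision can be reviewed later without re-running the model.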

Big picture

Taken together, integrating AI into tax comes down to two questions:

- What: GenAI can support almost every tax sub-domain (from core compliance to M&A).

- How: A five-step maturity model: strategy, use-case prioritisation, AI agents, governance, and process integration.

This positions AI not just as automation, but as the backbone of a knowledge-driven, proactive, and fair tax system.

AI systems and related systems

Principle 1: Closed & Sovereign by Default

All AI systems, data processing, and analytical capabilities must operate within national boundaries and be subject to direct governmental control. This principle addresses both security concerns and democratic governance requirements by ensuring complete national autonomy over critical fiscal infrastructure.

Sovereign deployment eliminates dependencies on foreign cloud providers, reduces cybersecurity risks, and ensures compliance with data localisation requirements across jurisdictions. Technical implementation utilises on-premises infrastructure or sovereign cloud providers that operate under national jurisdiction with clear legal frameworks for data protection and access control.

The closed architecture approach enables customisation for national legal frameworks, cultural contexts, and operational requirements that generic international solutions cannot address. Local model training on domestic data produces superior performance for jurisdiction-specific compliance patterns, legal interpretations, and economic behaviours.

Data sovereignty extends beyond physical hosting to encompass algorithmic sovereignty. Models must be trained, validated, and maintained using domestic expertise and infrastructure, creating institutional capability that supports long-term strategic autonomy in digital governance.

Implementation requires a substantial initial investment in infrastructure and expertise, but generates long-term benefits through reduced operational dependencies, enhanced security, and improved performance for local use cases. The BRICS-plus research confirms that nations with stronger sovereign AI capabilities achieve better sustained outcomes from tax administration modernisation.

Principle 2: Explainable by Design

Every AI system decision must be accompanied by clear, comprehensible explanations suitable for different stakeholder audiences, including taxpayers, tax officials, and judicial review processes. This requirement transforms traditional "black box" machine learning into transparent, accountable decision-making systems.

Technical implementation uses multiple explainability techniques, depending on the model architecture and use-case requirements. SHAP (SHapley Additive exPlanations) values decompose individual predictions into feature contributions, LIME (Local Interpretable Model-agnostic Explanations) provides local approximations for complex models, and attention mechanisms in neural networks can indicate which inputs influenced specific outputs.
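To show what "decomposing a prediction into feature contributions" means, the sketch below computes exact Shapley values by brute force for a toy risk model. It does not use the SHAP library, which approximates these values efficiently for real models; the model, its coefficients, the feature names, and the all-zero baseline are all assumptions chosen for illustration.

```python
from itertools import permutations
from math import factorial


def shapley_values(model, instance, baseline):
    """Exact Shapley values: average each feature's marginal contribution
    over every order in which features switch from baseline to instance."""
    features = list(instance)
    contrib = {f: 0.0 for f in features}
    for order in permutations(features):
        current = dict(baseline)
        prev = model(current)
        for f in order:
            current[f] = instance[f]
            new = model(current)
            contrib[f] += new - prev
            prev = new
    n_orders = factorial(len(features))
    return {f: total / n_orders for f, total in contrib.items()}


def toy_risk_model(x):
    # Hypothetical scoring rule with one interaction term.
    score = 0.3 * x["late_filings"] + 0.5 * x["vat_refund_ratio"]
    if x["late_filings"] > 2 and x["cash_intensive"]:
        score += 1.0
    return score


baseline = {"late_filings": 0, "vat_refund_ratio": 0.0, "cash_intensive": 0}
instance = {"late_filings": 4, "vat_refund_ratio": 0.6, "cash_intensive": 1}

phi = shapley_values(toy_risk_model, instance, baseline)
for name, value in phi.items():
    print(f"{name}: {value:+.2f}")
```

The contributions sum to the difference between the instance's score and the baseline score, and the interaction bonus is split equally between the two features that jointly trigger it. Enumerating all orderings is only feasible for a handful of features, which is why practical tools rely on approximations.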

Explanation quality varies by audience requirements. Technical staff receive detailed feature importance scores and model performance metrics. Operational staff receive narrative summaries that highlight key risk factors and supporting evidence. Citizens and their representatives access simplified explanations that respect privacy whilst providing sufficient detail for understanding and potential challenge.

Explainability operates at multiple system levels: individual predictions include feature attributions and confidence intervals, model performance includes bias testing and fairness metrics, and system behaviour provides audit trails and decision logging. This comprehensive approach supports both operational transparency and regulatory compliance.
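As one example of a fairness metric at the model level, the sketch below compares flag rates across taxpayer groups, a measure often called the demographic parity gap. The group labels and records are made up for illustration, and `flag_rates` and `parity_gap` are hypothetical helper names; a real bias test would use several metrics and proper statistical checks.

```python
from collections import defaultdict


def flag_rates(records):
    """records: iterable of (group, was_flagged) pairs."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, was_flagged in records:
        totals[group] += 1
        flagged[group] += int(was_flagged)
    return {g: flagged[g] / totals[g] for g in totals}


def parity_gap(records):
    """Largest difference in flag rate between any two groups."""
    rates = flag_rates(records)
    return max(rates.values()) - min(rates.values())


# Made-up data: 3 of 10 small businesses flagged, 1 of 10 large corporates.
records = (
    [("small_business", True)] * 3 + [("small_business", False)] * 7
    + [("large_corporate", True)] * 1 + [("large_corporate", False)] * 9
)

rates = flag_rates(records)
gap = parity_gap(records)
print(rates, f"gap={gap:.2f}")
```

A gap on its own does not prove unfair treatment, since groups can differ in genuine risk, but a large or growing gap is a reasonable trigger for closer review.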

The Australian Taxation Office's experience with explainable AI demonstrates that transparent systems achieve better compliance outcomes through enhanced taxpayer trust and cooperation. Clear explanations reduce appeals and disputes whilst improving voluntary compliance rates.

Principle 3: Human-in-the-Loop

AI systems augment, rather than replace, human judgment, particularly for high-stakes decisions that affect taxpayer rights or significant revenue amounts. This principle recognises the complementary strengths of human expertise and machine capabilities, while ensuring democratic accountability for consequential decisions.

Implementation employs graduated automation based on decision impact and complexity. Routine tasks (standard risk scoring, basic compliance checks, information retrieval) operate with minimal human oversight. Complex cases (unusual patterns, significant amounts, novel legal issues) require active human review. Critical decisions (major audits, penalties, policy recommendations) demand explicit human approval.

Human oversight mechanisms include confidence thresholds that trigger human review, quality requirements for explanations of automated decisions, and escalation procedures for cases that exceed normal parameters. These systems ensure that human expertise remains central to decision-making whilst enabling AI systems to handle routine work that would otherwise overwhelm human analysts.
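The graduated automation and confidence thresholds described above can be sketched as a routing function. The three tiers follow the text; the threshold values, the action names, and the `route_case` signature are assumptions for illustration.

```python
from enum import Enum


class Route(Enum):
    AUTOMATED = "automated"            # routine: minimal human oversight
    HUMAN_REVIEW = "human_review"      # complex: active human review
    HUMAN_APPROVAL = "human_approval"  # critical: explicit human approval


# Hypothetical labels for the critical decisions named in the text.
CRITICAL_ACTIONS = {"major_audit", "penalty", "policy_recommendation"}


def route_case(action, confidence, amount, novel_issue=False,
               min_confidence=0.9, amount_limit=100_000):
    """Decide how much human involvement a case needs.
    Thresholds are placeholders, not recommended values."""
    if action in CRITICAL_ACTIONS:
        return Route.HUMAN_APPROVAL
    if confidence < min_confidence or amount > amount_limit or novel_issue:
        return Route.HUMAN_REVIEW
    return Route.AUTOMATED


print(route_case("risk_score", confidence=0.97, amount=2_000))
print(route_case("risk_score", confidence=0.60, amount=2_000))
print(route_case("penalty", confidence=0.99, amount=500))
```

Checking the critical tier first means a high model confidence can never bypass explicit approval for penalties or major audits.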

Training and support systems enable human staff to collaborate effectively with AI systems. This includes understanding model capabilities and limitations, interpreting explanations and confidence measures, and maintaining professional judgment when AI recommendations conflict with human assessment.

The BRICS-plus research demonstrates that tax administrations achieving the best outcomes from AI deployment maintain strong human-AI collaboration rather than pursuing full automation. Human oversight provides essential contextual understanding and ethical reasoning that current AI systems cannot replicate.

Principle 4: Compliance-First Design

System architecture anticipates and exceeds regulatory requirements rather than retrofitting compliance capabilities. This proactive approach reduces implementation risks whilst building institutional legitimacy through demonstrated commitment to democratic governance principles.

EU AI Act compliance requires comprehensive risk management systems for high-risk applications, including audit selection. Implementation includes documented risk assessments, bias testing procedures, human oversight mechanisms, and provisions for citizen rights. These requirements are integrated into the system design rather than being added as external constraints.

UK AI Playbook guidance emphasises procurement standards, deployment monitoring, and performance measurement that inform system architecture decisions. Automated compliance reporting, audit trail generation, and performance dashboards become core system capabilities rather than additional requirements.

Privacy protection operates through multiple defensive layers, including differential privacy for statistical releases, homomorphic encryption for specific analytical workloads, and comprehensive access control systems. These protections exceed minimum legal requirements whilst maintaining analytical capability.
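For differential privacy on statistical releases, a common building block is the Laplace mechanism: add noise scaled to sensitivity divided by epsilon before publishing a count. The sketch below uses only the standard library; the epsilon value, the example count, and the function names are assumptions, and a production system would use a vetted library and track the privacy budget across releases.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace distribution centred on 0 with that scale.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(true_count, epsilon, rng, sensitivity=1.0):
    """Release a count with epsilon-differential privacy.
    Sensitivity is 1 when one taxpayer changes the count by at most 1."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(0)  # seeded only to make the sketch repeatable
released = private_count(true_count=100, epsilon=1.0, rng=rng)
print(f"released count: {released:.1f}")
```

Smaller epsilon values give stronger privacy but noisier statistics, so the choice is a policy decision as much as a technical one.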

Audit capabilities include comprehensive decision logging, model performance monitoring, bias detection systems, and processes for handling citizen complaints. These systems provide evidence for regulatory compliance whilst supporting continuous improvement and institutional accountability.

The compliance-first approach recognises that sustainable AI deployment requires public trust and institutional legitimacy. Technical capability alone cannot deliver lasting transformation without accompanying governance frameworks that ensure democratic accountability and protect citizen rights.

Integration and Implementation

These four principles operate synergistically rather than creating conflicting requirements. Sovereign deployment supports both security and compliance objectives whilst explainable systems enable effective human oversight. Compliance frameworks provide a structure for responsible deployment, while human-in-the-loop processes ensure democratic accountability.

Technical architecture decisions flow from these principles rather than driving them. Model selection prioritises explainability alongside accuracy, infrastructure design emphasises sovereignty alongside performance, and operational procedures ensure human oversight alongside efficiency.

Success metrics include both technical performance (accuracy, efficiency, reliability) and governance outcomes (transparency, accountability, citizen trust). This balanced approach creates a sustainable AI deployment that delivers operational benefits whilst maintaining democratic legitimacy.

The BRICS-plus research confirms that nations implementing comprehensive governance frameworks for AI deployment achieve better long-term outcomes than those prioritising technical capability alone. Institutional trust and regulatory compliance create enabling conditions for ambitious AI deployment that delivers substantial public benefits.


Dinis Guarda

Author

Dinis Guarda is an author, entrepreneur, and founder and CEO of ztudium, Businessabc, citiesabc.com and Wisdomia.ai. Dinis is an AI leader, researcher and creator who has been building proprietary solutions based on technologies like digital twins, 3D, spatial computing, and AR/VR/MR. Dinis is also the author of multiple books, including "4IR AI Blockchain Fintech IoT Reinventing a Nation". Dinis has collaborated with the likes of UN / UNITAR, UNESCO, European Space Agency, IBM, Siemens, Mastercard, and government bodies such as USAID and the Malaysia Government, to mention a few. He has been a guest lecturer at business schools such as Copenhagen Business School. Dinis is ranked as one of the most influential people and thought leaders in Thinkers360 / Rise Global’s The Artificial Intelligence Power 100, and among the Top 10 thought leaders in AI, smart cities, metaverse, blockchain, and fintech.
