Understanding AI: What It Is and Why It Matters

15 Sept 2025, 5:01 pm GMT+1

Artificial Intelligence is a story of vision, setbacks, and breakthroughs. From Turing’s questions to the arrival of ChatGPT, AI has advanced rapidly and is projected to add up to $15.7 trillion to the global economy by 2030. But beyond algorithms and machines, the real question is: how will we, as humans, shape and govern this transformative force for the benefit of humanity?

Artificial Intelligence (AI) is everywhere, shaping how we live, work, and connect. When I speak with entrepreneurs, leaders, and policymakers, I often find that while everyone has heard of AI, not everyone truly understands what it means in practical terms.

According to PwC, AI will contribute up to $15.7 trillion to the global economy by 2030, making it one of the most transformative technologies of our time. In fact, a report by McKinsey shows that companies adopting AI at scale are already seeing profit margins 3–15% higher than their peers.

But beyond the numbers, AI touches us in more personal ways every single day. Whether it is Google helping us find answers, Netflix suggesting our next film, or Alexa responding to our voice commands, AI is quietly woven into the fabric of modern life. And yet, its definition is not as simple as many might think.

So, let’s take a closer look at what AI really is, why it matters, and how it continues to evolve.

What do we mean by Artificial Intelligence?

The International Organization for Standardization (ISO) defines AI as “a technical and scientific field devoted to the engineered system that generates outputs such as content, forecasts, recommendations or decisions for a given set of human-defined objectives.”

This may sound very technical, so let’s break it down.

AI is simply about creating machines and software capable of performing tasks that usually need human intelligence, such as reasoning, learning, problem-solving, or making decisions. Crucially, AI can process and analyse huge amounts of data at speeds and scales that no human ever could.

Russell and Norvig, two of the most respected figures in the field, describe AI as the study of intelligent agents: systems that receive inputs (percepts) from their environment and take actions that influence it. In practice, this spans everything from Google’s search engine algorithms to self-driving cars and robotics.

For everyday people, AI shows up in very simple ways:

- Voice recognition through assistants like Alexa and Siri.

- Language translation on Google Translate.

- Navigation through apps such as Waze or Citymapper.

- Content recommendations on platforms like Spotify, YouTube, and Netflix.

At the more ambitious end, many researchers are working towards Artificial General Intelligence (AGI), AI systems that can match or even surpass human intelligence across a wide range of tasks. Whether AGI is achievable remains a subject of much debate.

AI is broad, spanning computer science, data analytics, linguistics, philosophy, neuroscience, psychology, and more. It is constantly evolving, and what counted as AI 20 years ago may not be considered AI today.

AI ABC: Starting with the Basics

Although AI can feel complex, knowing a few core terms makes it easier to follow.

- Neural Networks: The foundation of deep learning, loosely modelled on the brain. They consist of layers of artificial “neurons” joined by weighted connections that are adjusted during training. When trained properly, they can recognise patterns, such as identifying a cat in a photo.

- Machine Learning (ML): Uses statistics to detect patterns in data. It powers recommendation systems on Netflix or Spotify, search engines, and voice assistants.

- Deep Learning: An advanced form of ML that uses neural networks with many layers, trained on massive datasets, to detect even subtle patterns. It underpins image recognition, advanced translation, and more.

- Generative AI: The breakthrough of recent years. Tools like ChatGPT create text, while others generate images, music, and even video. Generative AI is now being applied in industries from drug discovery to marketing.

- Large Language Models (LLMs): A subset of generative AI. These are trained on huge amounts of text and can generate human-like responses. The GPT models behind ChatGPT are one well-known example.
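To make the neural network idea above more concrete, here is a minimal sketch of a single artificial neuron in Python. The feature names, weights, and bias are illustrative assumptions chosen by hand; in a real network, training sets these values, and thousands or millions of such units are chained together.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: a weighted sum of inputs passed through a sigmoid."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))  # squashes the sum into the range (0, 1)

# Hypothetical features of a photo ("has whiskers", "has pointed ears").
# The weights and bias are hand-picked stand-ins for what training would learn.
features = [1.0, 1.0]
weights = [2.0, 1.5]
bias = -2.0

score = neuron(features, weights, bias)
print(f"cat score: {score:.2f}")
```

A score close to 1 means the neuron "fires" for this input. Training a network amounts to nudging the weights and bias until scores like this match the labelled examples.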

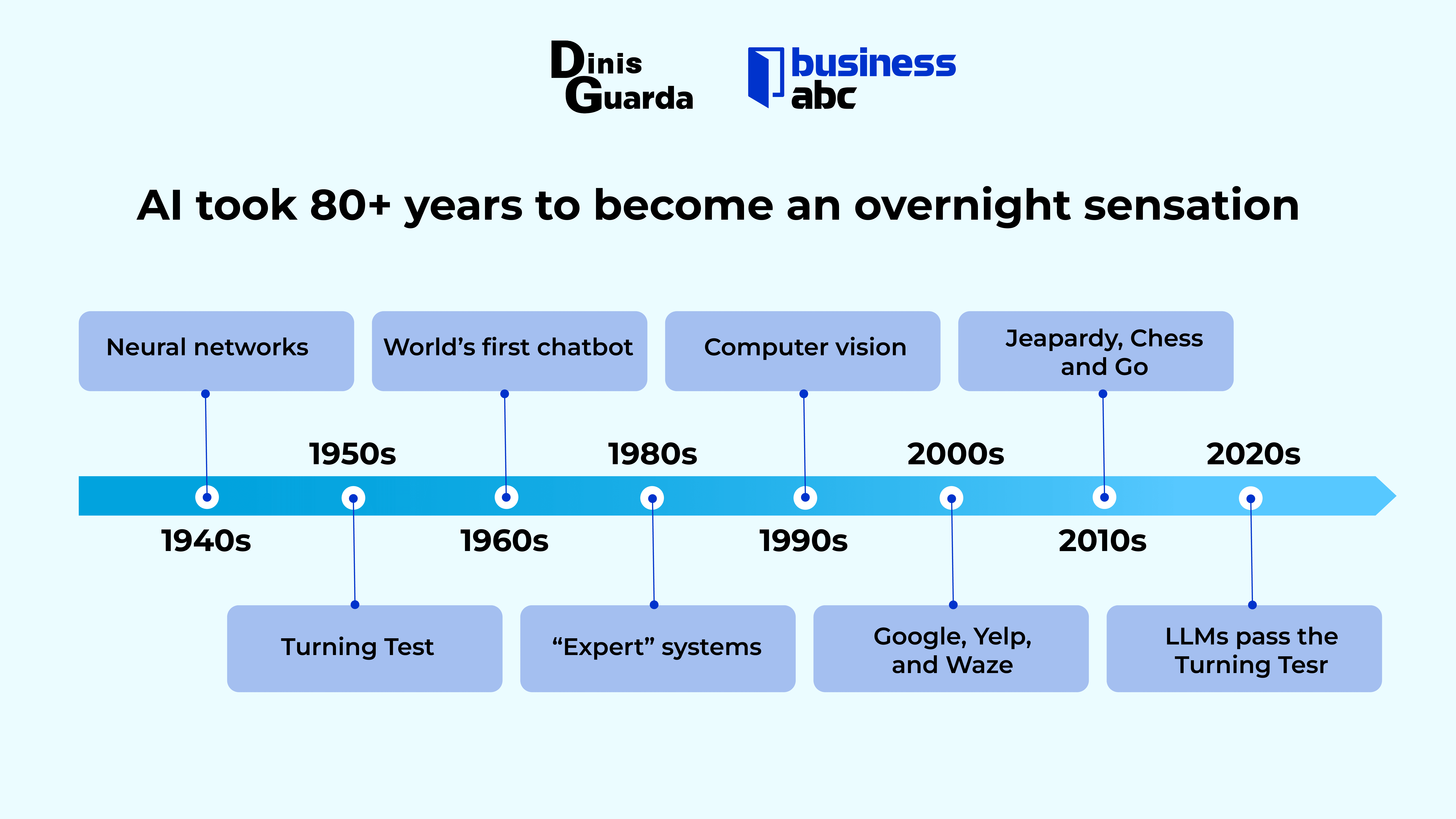

A brief history of AI

Artificial Intelligence has a long and fascinating history. The dream of creating intelligent machines is not new; it stretches back centuries, with roots in both philosophy and science fiction, before becoming a scientific discipline in the mid-20th century.

Early ideas and foundations (19th century–1950s)

The story begins in the 1800s with visionaries like Charles Babbage, who designed the Analytical Engine, a mechanical general-purpose computer, and Ada Lovelace, who wrote about how it could be programmed. Lovelace speculated that such a machine could be programmed to carry out tasks beyond pure calculation, an idea that foreshadowed AI.

In 1950, British mathematician Alan Turing asked a bold question: “Can machines think?” He proposed what became known as the Turing Test as a way of assessing machine intelligence: if a computer could hold a conversation indistinguishable from that of a human, it could be considered intelligent. Though controversial, this concept became a cornerstone of AI philosophy.

The birth of AI (1956 Dartmouth Conference)

The official birth of AI as a field came in 1956, at the Dartmouth Conference in the United States. Researchers like John McCarthy (who coined the term “artificial intelligence”), Marvin Minsky, Allen Newell, and Herbert Simon set an ambitious goal: to create machines capable of language, problem-solving, and learning.

This period, known as the golden age of symbolic AI, produced early successes such as simple problem solvers, chess-playing programs, and early natural language processors. Optimism was high, with researchers predicting human-level AI within a generation.

Growth and challenges (1960s–1980s)

The 1960s and 1970s saw rapid experimentation:

- ELIZA (1966), an early chatbot by Joseph Weizenbaum at MIT, simulated a psychotherapist convincingly enough that some users believed they were speaking to a human.

- Governments and universities funded ambitious projects in robotics, expert systems, and machine translation.

But progress slowed. AI systems often failed outside controlled lab environments, and as promises went unfulfilled, interest and investment collapsed. This period in the 1970s became known as the first AI Winter.

In the 1980s, AI enjoyed a revival with expert systems, programs designed to mimic the decision-making of specialists in areas like medicine and engineering. However, these systems were expensive and rigid, leading to another downturn by the late 1980s.

Revival and breakthroughs (1990s–2000s)

The 1990s brought new hope. Advances in computing power and algorithms reignited progress:

- 1997: IBM’s Deep Blue defeated world chess champion Garry Kasparov, a landmark moment that showed machines could outperform humans in specific intellectual tasks.

- The early 2000s introduced consumer-facing AI, such as the Roomba vacuum robot and early speech recognition software, making AI a practical reality in homes.

The era of big data and deep learning (2010s)

The 2010s marked a turning point. With the rise of big data, more powerful processors (GPUs), and advances in algorithms, AI achieved breakthroughs once thought impossible.

- On benchmarks such as ImageNet, image recognition systems surpassed reported human-level accuracy.

- AI-powered voice assistants like Apple’s Siri (2011) and Amazon’s Alexa (2014) became household names.

- Google’s DeepMind developed AlphaGo, which defeated Lee Sedol, one of the world’s top players, at the complex game of Go in 2016, stunning experts who believed such progress was decades away.

Generative AI and the modern era (2020s)

The past few years have seen AI move from specialist labs into the public spotlight.

- In 2022, OpenAI released ChatGPT, built on its GPT-3.5 family of models, which could write essays and code and hold natural conversations.

- Image generation models like DALL·E 2 and Stable Diffusion allowed anyone to create realistic artwork from text prompts.

- Industries from healthcare to finance began experimenting with AI to accelerate research, automate operations, and personalise services.

This explosion of generative AI has sparked both excitement and debate. While some see it as a tool to boost productivity and creativity, others warn about risks, from misinformation to job disruption.

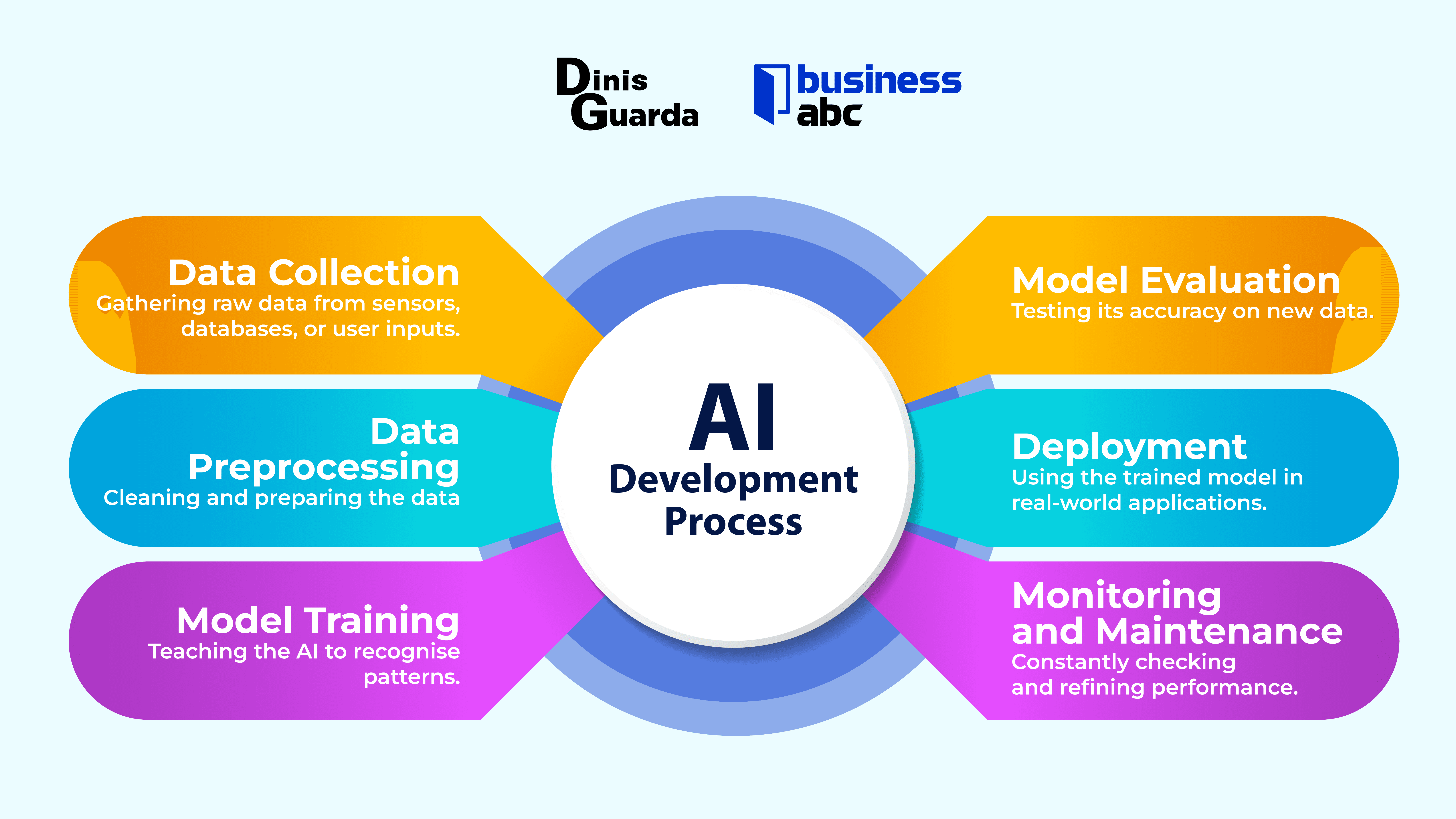

How does AI actually work?

AI systems go through several stages:

- Data Collection – Gathering raw data from sensors, databases, or user inputs.

- Data Preprocessing – Cleaning and preparing the data.

- Model Training – Teaching the AI to recognise patterns.

- Model Evaluation – Testing its accuracy on new data.

- Deployment – Using the trained model in real-world applications.

- Monitoring and Maintenance – Constantly checking and refining performance.

Repeating this cycle, with models retrained as new data arrives, is what allows AI systems to adapt and improve over time.
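The six stages above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not a production workflow: the data is synthetic (an invented relationship between advertising spend and sales), the "model" is a simple straight-line fit, and the retraining tolerance is an arbitrary value chosen for the example.

```python
import random
import statistics

random.seed(0)  # fixed seed so the sketch is repeatable

# 1. Data collection: synthetic (ad_spend, sales) pairs standing in for real records.
raw = [(x, 3.0 * x + 5.0 + random.gauss(0, 1.0)) for x in range(1, 41)]
raw.append((None, 12.0))  # a deliberately broken record

# 2. Data preprocessing: drop incomplete rows, shuffle, split into train and test sets.
clean = [(x, y) for x, y in raw if x is not None and y is not None]
random.shuffle(clean)
train, test = clean[:30], clean[30:]

# 3. Model training: fit y = slope * x + intercept by ordinary least squares.
def fit(rows):
    xs = [x for x, _ in rows]
    ys = [y for _, y in rows]
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in rows)
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x

slope, intercept = fit(train)

# 4. Model evaluation: mean absolute error on data the model has not seen.
def mean_abs_error(rows):
    return statistics.fmean([abs(y - (slope * x + intercept)) for x, y in rows])

test_error = mean_abs_error(test)

# 5. Deployment: expose the trained model as a plain function.
def predict(x):
    return slope * x + intercept

# 6. Monitoring: flag the model for retraining if error on fresh data drifts too high.
# The tolerance of 3.0 is a hypothetical threshold for this example only.
def needs_retraining(new_rows, tolerance=3.0):
    return mean_abs_error(new_rows) > tolerance

print(f"slope={slope:.2f}, intercept={intercept:.2f}, test error={test_error:.2f}")
print("retrain?", needs_retraining([(50, 155.0), (60, 185.0)]))
```

Real systems replace each step with far heavier machinery, but the shape is the same: the model is only as good as the data it was trained on, and monitoring is what tells you when that data no longer reflects reality.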

AI in business: Practical applications

Today, businesses rely heavily on AI to streamline operations, improve customer experiences, and drive revenue. Some key applications include:

- Machine Learning Algorithms – For predictive analytics, fraud detection, and customer segmentation.

- Natural Language Processing (NLP) – Powering chatbots, sentiment analysis, and automated customer support.

- Computer Vision – Used in retail for inventory, healthcare for medical imaging, and security for facial recognition.

- Predictive Analytics – Helping firms forecast demand, sales trends, and financial risks.

- Optimisation Algorithms – Improving logistics, scheduling, and supply chain efficiency.

- Recommendation Engines – Personalising customer journeys in e-commerce, streaming, and online services.

- Anomaly Detection – Identifying fraud, cybersecurity threats, or irregular patterns in data.

The impact is clear: AI enables companies to be faster, more efficient, and more responsive to customer needs.
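As one concrete illustration of the anomaly detection item in the list above, the sketch below flags values that sit unusually far from the average. The transaction amounts and the threshold are hypothetical, and this simple statistical rule is only one of many possible techniques; commercial fraud systems typically use far richer models.

```python
import statistics

def find_anomalies(values, threshold=2.5):
    """Return values lying more than `threshold` standard deviations from the mean."""
    mean = statistics.fmean(values)
    spread = statistics.pstdev(values)
    if spread == 0:
        return []  # all values identical, so nothing stands out
    return [v for v in values if abs(v - mean) / spread > threshold]

# Hypothetical card transactions in pounds; 950.0 is planted as the outlier.
transactions = [42.0, 38.5, 51.0, 45.2, 39.9, 47.3, 950.0, 44.1, 40.8, 49.6]
print(find_anomalies(transactions))
```

With this sample, only the 950.0 transaction is flagged. The threshold controls the trade-off every such system faces: set it too low and staff drown in false alarms, too high and genuine fraud slips through.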

Future of AI: Why does it matter for your business?

Business is heading for a significant shift, and companies should prepare for an AI-driven future through education and implementation. Learning pathways are likely to split into two distinct tracks: one focused on operating AI systems and the other on creating and developing them.

Simultaneously, the investment landscape is evolving, as traditional corporate structures are increasingly replaced by networks of solo entrepreneurs empowered by AI tools, reshaping how businesses are built and scaled.

AI isn’t merely a technological update; it is fundamentally transforming how we work, create value, and define success. In the coming years, it will become clear who the true innovators are and who risks being left behind. Leaders who grasp this shift won’t just adapt; they’ll reinvent entire industries from scratch. The AI revolution isn’t a distant prospect. It is already underway, quietly reshaping every sector it touches.

Businesses must prepare for this evolution, rethinking processes that could benefit from robots operating in tandem with human teams. Policymakers need to update regulations, ensuring the responsible deployment of technology that respects safety, privacy, and workforce well-being. Researchers should continue bridging the gap between physical perception and cognitive-level reasoning, ensuring robust, adaptable, and ethical AI systems.

Dinis Guarda

Author

Dinis Guarda is an author, entrepreneur, and the founder and CEO of ztudium, Businessabc, citiesabc.com and Wisdomia.ai. Dinis is an AI leader, researcher and creator who has been building proprietary solutions based on technologies such as digital twins, 3D, spatial computing, and AR/VR/MR. He is the author of multiple books, including "4IR AI Blockchain Fintech IoT Reinventing a Nation". Dinis has collaborated with organisations including UN / UNITAR, UNESCO, the European Space Agency, IBM, Siemens, and Mastercard, and with government bodies such as USAID and the Government of Malaysia. He has been a guest lecturer at business schools such as Copenhagen Business School. Dinis is ranked among the most influential people and thought leaders in Thinkers360 / Rise Global’s The Artificial Intelligence Power 100 and among the Top 10 thought leaders in AI, smart cities, metaverse, blockchain, and fintech.
